transformers

Broad-ranging notes on papers involving transformers. Biased towards things I find cool - neuroscience, trees, and automatic science.

models

See related papers in the 📌 interpretability page.

high-performing

nlp (see also this link)

- early papers

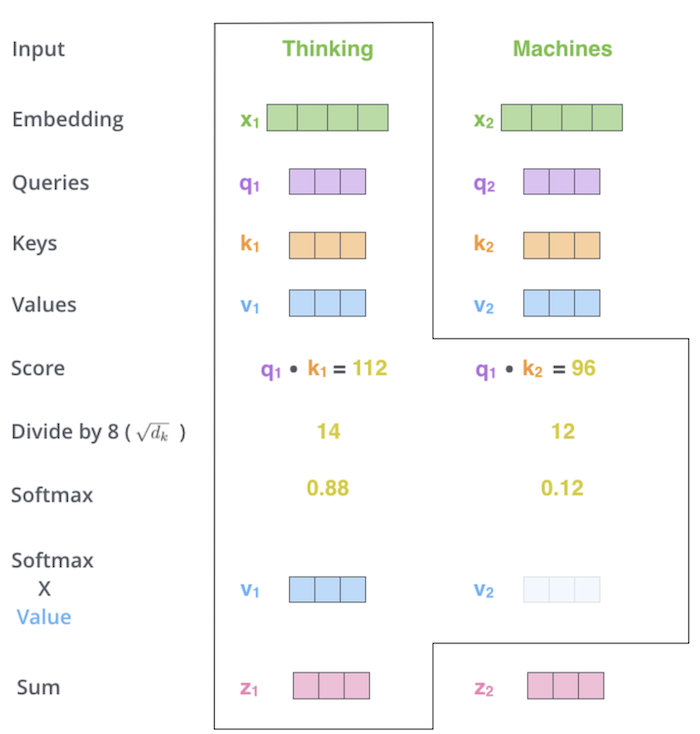

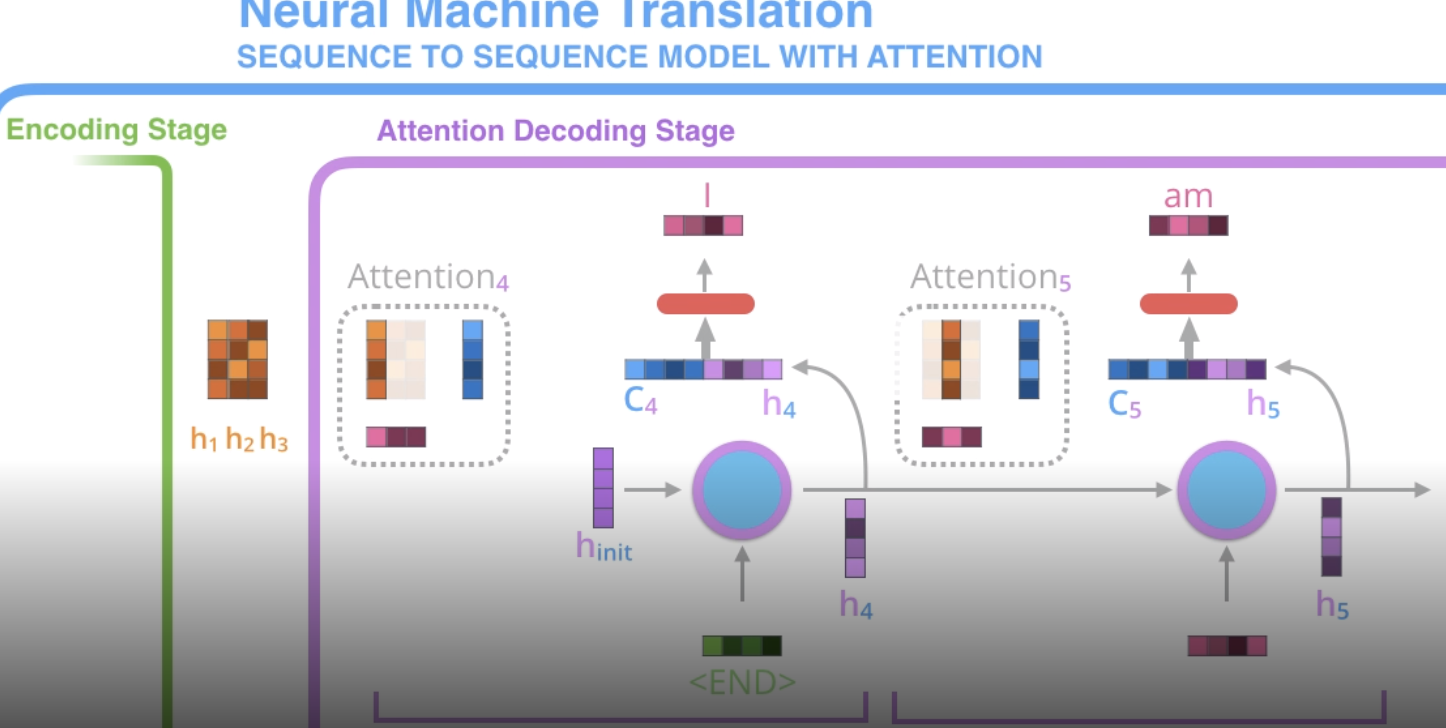

- attention is all you need (vaswani et al. 2017) - initial transformer

- encoder-decoder transformer for seq-to-seq (most new models don’t have special encoder-decoder structure for translation)

- Semi-supervised Sequence Learning (dai & quoc le, 2015)

- context vector is a weighted sum of the context vectors at each word

- ULMFiT (howard & ruder, 2018)

- BERT (devlin et al. 2018) - semi-supervised learning (predict masked word - this is bidirectional) + supervised finetuning

- roberta (liu et al. 2019)

- BART (lewis et al. 2019) - generalizes BERT with sequence-to-sequence training: train by (1) corrupting text then (2) reconstructing the original text

- ELMo (peters…zettlemoyer, 2018) - no word embeddings - train embeddings w/ bidirectional lstm (on language modeling)

- XLNet (yang…quoc le, 2019)

- GPT-4 (openai, 2023) - adds multimodal understanding + boosts context length to 32k

- GPT-3 (brown et al. 2020) - identical to GPT-2 except larger and alternates dense attention layers with locally banded sparse attention layers

- sizes: largest has 175B params, 96 layers, 96 heads in each layer, head with dim 128, vocab size ~50k

- InstructGPT (ouyang…lowe, 2022)

- GPT-2 (radford et al. 2019)

- GPT (radford et al. 2018)

- Gopher (deepmind, 2021) - basically gpt-3 with slight mods (replace layernorm by RMSnorm, different positional embeddings)

- open-source (from meta ai): LLaMA 2, LLaMA, OPT-IML, OPT

- GPT4All (LLaMA 7B finetuned on code/stories/dialogue)

- ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators (clark…quoc le, chris manning, 2020)

- more efficient: rather than standard masked training, use generator-discriminator setup for “token detection”

- generator replaces many masked tokens with plausible samples - train with MLM

- discriminator tries to guess which tokens were replaced by the generator - this is the main model that gets used

- LongNet: Scaling Transformers to 1,000,000,000 Tokens (ding, …, wei, 2023) - multiscale attention similar to wavelets

- Longformer: The Long-Document Transformer (Beltagy, Peters, & Cohan 2020) - processes very long contexts

- Lost in the Middle: How Language Models Use Long Contexts (liu…petroni, liang, 2023)

- PaLM: Scaling Language Modeling with Pathways (Google 2022) - 540 Billion params

- pathways hardware center allows for fast/efficient training

- discontinuous improvements - on some tasks, performance jumps sharply once the model is large enough

- prompt engineering: “Explain yourself” - lets it explain jokes

- Chinchilla: Training Compute-Optimal LLMs (DeepMind 2022)

- “chinchilla scaling laws” - for compute-optimal training, the model size and the number of training tokens should be scaled equally

- T0 (sanh…rush, 2022) - multitask training enables better zero-shot generalization

- T5 (raffel…liu, 2020) – text-to-text transfer transformer

- UL2: Unifying Language Learning Paradigms (tay…metzler, 2022) - open-source 20B model, beats GPT-3 at zero-shot

- early instruction following

- FLAN-PaLM: Scaling Instruction-Finetuned Language Models (chung, …, quoc le, jason wei, 2022) - finetune with datasets phrased as instructions

- FLAN (wei, …, le, 2021) - finetune on instructions to follow instructions

- subquadratic attention

- MAMBA (gu & dao, 2023) - state-space model

- smaller newer models

- phi-1, phi-2

- mistral 7B, mixtral MoE
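A rough sketch of the Chinchilla rule noted earlier in this section (scale model size and training tokens equally). The training-cost approximation $C \approx 6ND$ and the ratio of about 20 tokens per parameter are commonly quoted rules of thumb and are assumptions here, not values from these notes; `compute_optimal` is a hypothetical helper name.

```python
import math


def compute_optimal(flops, tokens_per_param=20.0):
    """Split a training FLOPs budget into (n_params, n_tokens).

    Assumes C ~= 6 * N * D and a fixed ratio D = r * N (r ~= 20 is a
    rule of thumb, not an exact constant). Substituting gives
    N = sqrt(C / (6 * r)), so both N and D grow as sqrt(C).
    """
    n_params = math.sqrt(flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    n, d = compute_optimal(5.88e23)
    print(f"params ~ {n:.3g}, tokens ~ {d:.3g}")
```

Under these assumptions, quadrupling the compute budget doubles both the parameter count and the token count, which is the "scaled equally" behavior.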

other

- text-vision models

- CLIP (radford et al. 2021) - jointly train text/images

- batch-based loss: encodings from same image/text pair should be close while encodings across different examples in the batch should be different

- note: empirically works better with very large batch size

- DALL-E 2 (OpenAI, 2022)

- uses CLIP as the foundation for a generative model

- generates text + image embeddings

- “prior network” maps text embedding to image embedding

- adds diffusion model

- Stable diffusion (stability.ai, 2022) - open-source recreation, now highly optimized for speed

- Imagen (google, 2022)

- BLIP-2 (salesforce, 2023) - Bootstrapping Language-Image Pre-training with Frozen Image Encoders and LLMs

- BEiT-3 (2022) - treat vision as language and large-scale multimodal training

- outperforms Flamingo: a Visual Language Model for Few-Shot Learning (2022), which uses more domain knowledge to connect vision & language

- video

- Text-To-4D Dynamic Scene Generation (meta, 2023)

- vision

- VIT: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (dosovitskiy, …, houlsby, 2020)

- attention augmentation to resnet for vision (bello…quoc le, 2020)

- here, people call image patches “tokens”

- DINO Emerging Properties in Self-Supervised Vision Transformers (caron…joulin, 2021)

- Masked Autoencoders Are Scalable Vision Learners (he…dollar, girshick, 2021) - BERT-style training

- speed up by not applying encoder to mask tokens + masking a large fraction of the patches (like 75%)

- really good results without much data

- spatial transformer networks (deepmind, 2015)

- rl

- AdA: Human-Timescale Adaptation in an Open-Ended Task Space (deepmind, 2023)

- GATO: A Generalist Agent (deepmind, 2022) - single agent plays many different video games

- different modalities are converted to tokens differently (e.g. image patches are fed through resnet)

- In-context Reinforcement Learning with Algorithm Distillation (laskin, wang, …, sahni, satinder singh, mnih, 2022, deepmind) - learn to improve an RL algorithm

- put history of (observation, action, reward) sequences into context and then use them to predict new action given new observation

- Decision Transformer: Reinforcement Learning via Sequence Modeling (chen, lu, …abbeel, srinivas, mordatch, 2021) - transformer that predicts what the next highest reward step is instead of the next word

- question-answering (now just done with generic LLMs)

- UnifiedQA: Crossing Format Boundaries With a Single QA System (khashabi…hajishirzi, 2020)

- dialog

- ChatGPT

- GODEL: Large-Scale Pre-Training for Goal-Directed Dialog (baolin peng, galley, …, gao , 2022) - add grounded pre-training

- Deal or No Deal? End-to-End Learning for Negotiation Dialogues (lewis…batra, 2017, Meta) - controversial paper where agents “make up their own language”

- this is pre-transformers

- MINERVA: Solving Quantitative Reasoning Problems with Language Models (google, 2022) - train on well-parsed, domain-specific data (math arxiv) to solve math-reasoning problems

- autoformalization (wu…, szegedy, 2022) - translating from natural language math to formal language

- produce sql/python that then finds an answer (cheng…zettlemoyer, smith, yu, 2022)

- CODEX: Evaluating LLMs Trained on Code (2021, openai)

- Repair Is Nearly Generation: Multilingual Program Repair with LLMs (Joshi et al. 2022)

- Improving automatically generated code from Codex via Automated Program Repair (Fan et al. 2022) - use automated program repair to tweak codex outputs to make them better

- Generating Question Titles for Stack Overflow from Mined Code Snippets (Gao et al. 2020)

- Automatic Program Repair with OpenAI’s Codex: Evaluating QuixBugs (Prenner & Robbes, 2021)

- use prompt like:

### fix the bug in the following function <buggy function and/or docstring here> ### fixed function

- program synthesis arxiv.org/abs/2108.07732 - formalize natural language into runnable code

- science

- Galactica: A LLM for Science (taylor…, stojnic, 2022, meta ai) - trained on mostly papers + some knowledge bases (e.g. proteins)

- Nougat: Neural Optical Understanding for Academic Documents (blecher…scialom, stojnic, 2023)

- music

- MusicLM: Generating Music From Text (google, 2023)

- Jukebox: A Generative Model for Music (openai, 2020)

- summarization / keywords

- KeyBERT: Minimal keyword extraction with BERT (grootendorst, 2020)

- text-to-speech

- Voicebox: Text-Guided Multilingual Universal Speech Generation at Scale (meta, 2023)
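The batch-based CLIP loss noted earlier in this section (matching image/text pairs close, mismatched pairs in the batch far apart) can be sketched in plain Python. This is a minimal illustration, not the reference implementation: the fixed `temperature=0.07` is an assumption (CLIP learns its temperature), and the function names are made up for this example.

```python
import math


def _normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cross_entropy_diag(logits):
    """Mean cross-entropy where row i's correct class is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[i]
    return total / len(logits)


def clip_style_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss over a batch of paired embeddings."""
    imgs = [_normalize(v) for v in image_emb]
    txts = [_normalize(v) for v in text_emb]
    # logits[i][j] = similarity between image i and text j, scaled by temperature
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
              for i in imgs]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (_cross_entropy_diag(logits) + _cross_entropy_diag(logits_t))
```

Every other example in the batch acts as a negative, which is one plausible reason the loss benefits from very large batches.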

prompting

- https://github.com/dair-ai/Prompt-Engineering-Guide

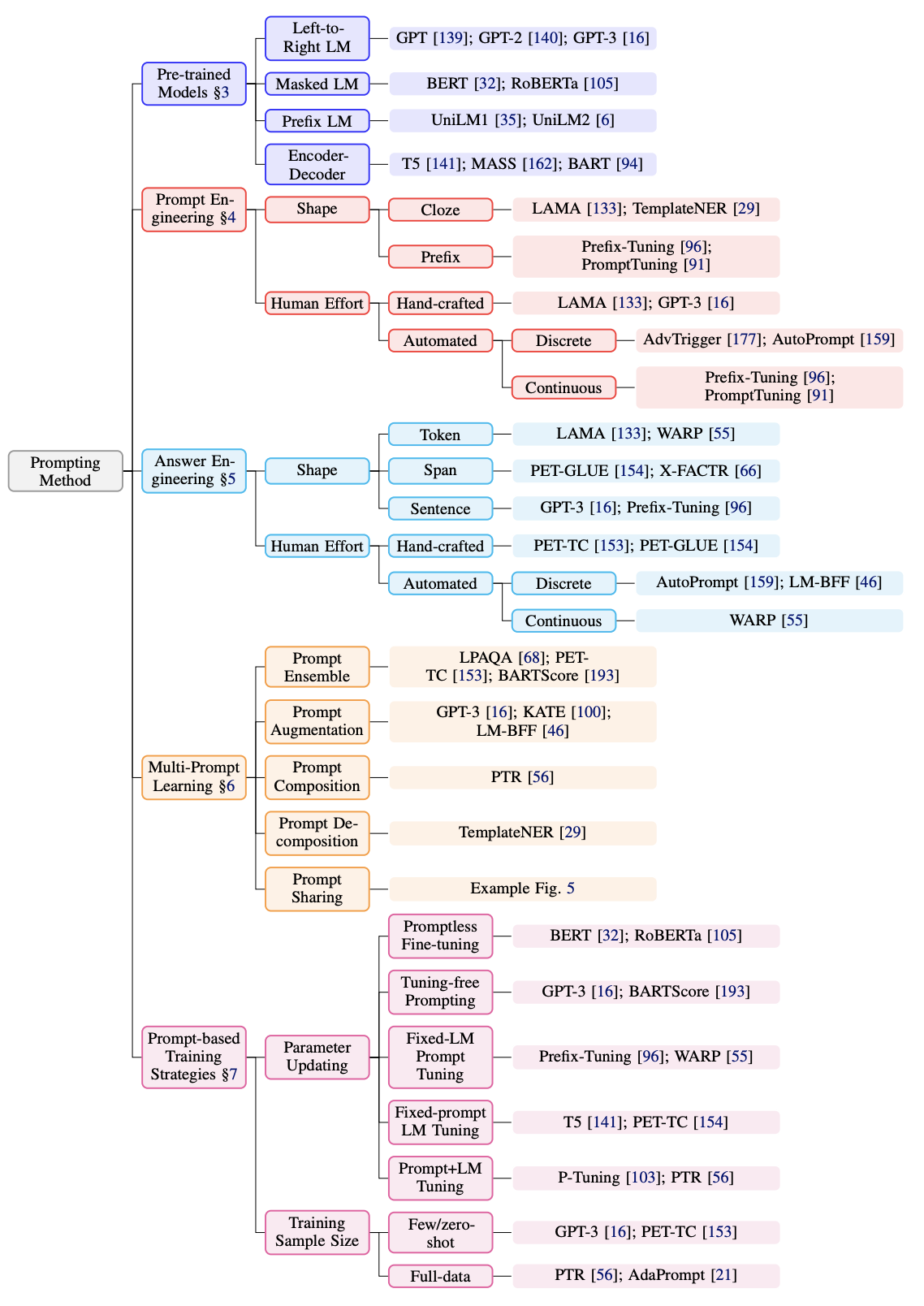

- Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing (liu…neubig, 2021)

- from feature-engineering -> architecture engineering -> prompt engineering

- early prompting papers

- LAMA Language Models as Knowledge Bases? (petroni…riedel, 2019) - Proposes using fill-in-the-blank (cloze) prompts for extracting knowledge from LLMs

- create LAMA probe - dataset of (subject, relation, object) triplets with templates – find that BERT can recall these relations

- How to Query Language Models? (adolphs et al. 2021) - query LLMs by example (e.g. “Ronaldo plays for Portugal. Who does Neuer play for?”)

- How Can We Know What Language Models Know? (jiang … neubig, 2020)

- mining-based and paraphrasing-based methods to automatically generate high-quality diverse prompts

- ensemble methods to combine answers from different prompts (e.g. avg logits and more)

- Noisy Channel Language Model Prompting for Few-Shot Text Classification (min et al. 2022)

- Querying $P(question \mid answer)$ with Bayes rule outperforms standard querying $P(answer \mid question)$

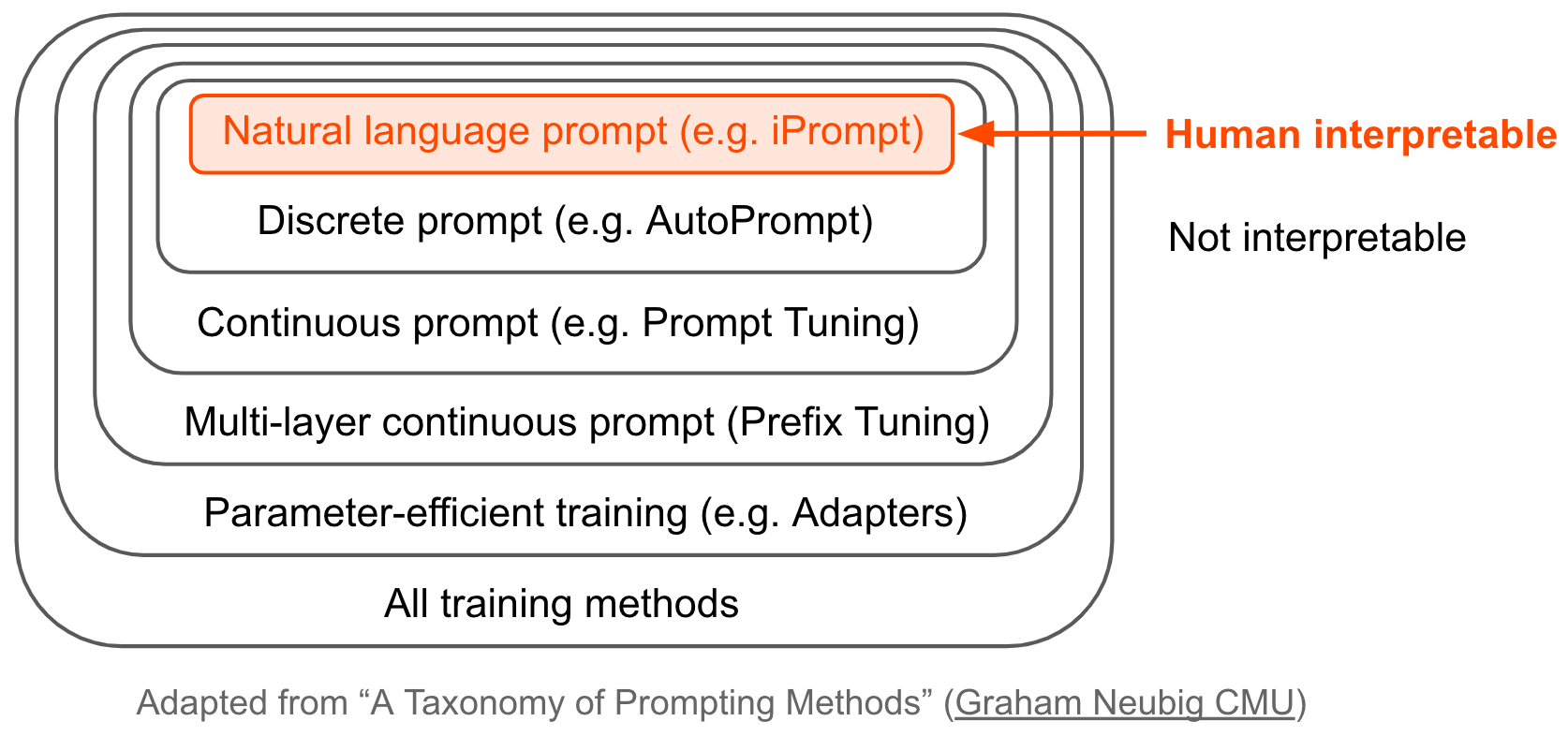
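For the noisy channel note above, the Bayes-rule step can be written out as follows. This is a sketch with $q$ for the question (input) and $a$ for the answer (label); the uniform prior in the last step is an assumption that applies when all labels are treated as equally likely a priori.

$$
\hat{a} = \arg\max_a P(a \mid q) = \arg\max_a \frac{P(q \mid a)\, P(a)}{P(q)} = \arg\max_a P(q \mid a)\, P(a)
$$

With a uniform prior $P(a)$, this reduces to $\hat{a} = \arg\max_a P(q \mid a)$, so the LM is asked to score the input given each candidate answer instead of the answer given the input.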

(auto)prompting

- natural-language prompting

- iPrompt: Explaining Patterns in Data with Language Models via Interpretable Autoprompting (singh, morris, …gao, 2022)

- APE: LLMs Are Human-Level Prompt Engineers (zhou…ba, 2022)

- similar to iPrompt, (1) propose prompt candidates with an LLM, (2) score the prompts by the accuracy they yield when using another LLM and (3) regenerate similar prompt candidates

- experiments on instruction induction datasets + truthful QA

- FluentPrompt: Toward Human Readable Prompt Tuning (shi, …, zettlemoyer, 2022) - use langevin sampling + fluency constraint to generate prompt

- experiments relatively weak: 3 sentiment datasets + autoprompt is the only baseline

- APO: Automatic Prompt Optimization with “Gradient Descent” and Beam Search (pryzant…zeng, 2023) - update prompts based on errors made by previous prompts

- OPRO: LLMs as Optimizers (yang…quoc le, zhou, & chen , 2023) - add in past prompts with their scores during optimization

- Promptbreeder: Self-Referential Self-Improvement Via Prompt Evolution (fernando…rocktäschel, 2023) - simultaneously improve prompts with LLM + improve the mutation-prompts the LLM uses to mutate the prompts

- Connecting LLMs with Evolutionary Algorithms Yields Powerful Prompt Optimizers (guo…yang, 2023)

- PromptAgent: Strategic Planning with LMs Enables Expert-level Prompt Optimization (wang…hu, 2023) - iterate on prompt errors using MC tree search

- Language Models as Black-Box Optimizers for Vision-Language Models (yu…pathak, & ramanan, 2023)

- discrete prompting

- AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts (shin…sameer singh, 2020)

- select prompts from a fixed set of tokens (resulting prompts are not coherent)

- only work on MLM

- elicit sentiment / factual knowledge

- Universal Adversarial Triggers for Attacking and Analyzing NLP (wallace…sameer singh, 2019) - find input-agnostic sequences of tokens that trigger a model to produce a specific prediction when concatenated to any input from a dataset

- RLPrompt: Optimizing Discrete Text Prompts with Reinforcement Learning (deng…hu, 2022)

- LM-BFF: Making Pre-trained Language Models Better Few-shot Learners (gao et al. 2020) - uses T5 to generate (i) template for the task (which might include a whole example or two) + (ii) appropriate label tokens in the vocabulary for the task (suffers from computationally intensive search + sub-optimal discrete space search)

- PADA: Example-based Prompt Learning for on-the-fly Adaptation to Unseen Domains (ben-david, …, reichart, 2022)

- continuous prompt optimization

- Prefix-Tuning: Optimizing Continuous Prompts for Generation (li & percy liang, 2021) – optimizes in continuous space for language generation tasks

- learn to map some parameters $\theta$ through an MLP to generate a starting hidden state $h_i$ – never actually sends the prefix through the network

- P-Tuning: GPT Understands, Too (liu et al. 2021) – use LSTM to generate prompt embeddings (don’t map to tokens)

- Control Prefixes for Parameter-Efficient Text Generation (clive, cao, & rei, 2022) - allow for adapting the prefix to each input example

- DART: Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners (zhang…chen, 2022)

- reformulating NLP task into differentially optimizing the prompt template + target label (given a pre-trained model)

- focus on smaller models (Roberta-large + GPT-2) + few training shots

- fluency constraint to ensure association among prompt embeddings

- WARP: Word-level Adversarial ReProgramming (Hambardzumyan et al. 2021) - add continuous tokens + some task-specific parameters for better generalization

- KnowPrompt: Knowledge-aware Prompt-tuning with Synergistic Optimization for Relation Extraction (Chen et al. 2021) – incorporate relations, visualize learned prompt vectors with t-SNE

- critiques of prompting

- Do Prompt-Based Models Really Understand the Meaning of their Prompts? (webson & pavlick, 2022) - models can learn fine with prompts that are intentionally irrelevant

- Are Language Models Worse than Humans at Following Prompts? It’s Complicated (webson, …, pavlick, 2023)

- Fantastically ordered prompts and where to find them: Overcoming few-shot prompt order sensitivity (lu…riedel, stenetorp, 2021)

- Quantifying Language Models’ Sensitivity to Spurious Features in Prompt Design or: How I learned to start worrying about prompt formatting (sclar, choi…, suhr, 2023)

- misc

- Context-faithful Prompting for LLMs (zhou, shang, poon & chen, 2023) - ask question in clever way to force LLM to follow it

- SentiPrompt: Sentiment Knowledge Enhanced Prompt-Tuning for Aspect-Based Sentiment Analysis (Zhang et al. 2021) - use sentiment knowledge penalties in the prompt

- Meta-learning via Language Model In-context Tuning (Chen et al. 2022) - given new task with new instruction

- Prompt Programming for LLMs: Beyond the Few-Shot Paradigm (Reynolds & McDonell, 2021) - define metaprompts as general wrappers around tasks e.g. “This problem asks us to”

- Re3: Generating Longer Stories With Recursive Reprompting and Revision (Yang et al. 2022) - generate summaries, then expand and revise with prompts

- Directional Stimulus Prompting (li, baoling peng, …jianfeng gao, xifeng yan, 2023) - generate hint keywords using small LLM that are put into the prompt when calling large LLM

- memory-assisted prompt-editing (madaan…yang, 2022) - allows model to “save things to memory” that get added to prompt when needed

- Prompting Is Programming: A Query Language For LLMs (Beurer-Kellner, Fischer, & Vechev, 2022)

- can benefit from training for promptability

- Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections (zhong…klein, 2021)

- Continued Pretraining for Better Zero- and Few-Shot Promptability (wu…sameer singh, beltagy, 2022)
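The propose / score / regenerate loop described for APE (and shared in spirit by iPrompt) can be sketched as a small search routine. All three callables are hypothetical stand-ins for LLM calls, and the round and survivor counts are arbitrary; this is not the papers' code.

```python
def prompt_search(propose, score, resample, n_rounds=3, keep=2):
    """Iteratively refine a pool of candidate prompts.

    propose():   returns an initial list of candidate prompts (an LLM call in practice)
    score(p):    returns a number, e.g. accuracy of another LLM when using prompt p
    resample(p): returns a list of prompts similar to p (e.g. LLM paraphrases)
    """
    candidates = list(propose())
    for _ in range(n_rounds):
        # keep the best-scoring prompts, then add variations of them
        survivors = sorted(candidates, key=score, reverse=True)[:keep]
        candidates = survivors + [v for p in survivors for v in resample(p)]
    return max(candidates, key=score)
```

In practice `score` is the expensive step, since each call evaluates a prompt on held-out examples, so caching scores per prompt would be a natural extension.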

llm chaining / decoding

many notes are from this thread on chaining models together

- overviews

- Ai chains: Transparent and controllable human-ai interaction by chaining LLM prompts (wu, terry, & cai, 2022) - chaining LLM steps together: output of one step becomes the input for the next

- interactive system where users can modify chains + their intermediate results – improves performance + human experience

- Language Model Cascades (dohan…sutton, 2022) - treat chaining models as probabilistic programs

- use a probabilistic-programming language (PPL) to define a joint probability model on string-valued random variables, parameterized using LMs, and then condition this model on string-valued observations in order to compute a posterior over string-valued unknowns

- self-PPLs extend probabilistic graphical models to support more complex joint distributions whose size and “shape” can itself be stochastic

- e.g., a graph unrolled for a random number of iterations, until a data-dependent stopping criterion is met

- variables are all text: questions $Q$, answers $A$, and intermediate thoughts $T$

- posthoc

- understanding chain-of-thought and its faithfulness

- Faithful Chain-of-Thought Reasoning (lyu et al. 2023)

- Contrastive Chain-of-Thought Prompting (chia…bing, 2023)

- Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks (chen et al. 2022)

- Critiques

- Do Models Explain Themselves? Counterfactual Simulatability of Natural Language Explanations (chen, zhong, …, steinhardt, yu, mckeown, 2023)

- Benchmarking and Improving Generator-Validator Consistency of Language Models (lisa li…liang, 2023)

- The Unreliability of Explanations in Few-shot Prompting for Textual Reasoning (ye & durrett, 2022)

- Language Models Don’t Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting (turpin, …, bowman, 2023)

- CoT explanations can be heavily influenced by biasing the model towards certain answers, thereby yielding invalid explanations

- try biasing in 2 ways: answer is always (A), or setting where prompt suggests a certain answer

- Two Failures of Self-Consistency in the Multi-Step Reasoning of LLMs (chen, …, bowman, cho, 2023) - models fail at these 2 tasks:

- hypothetical consistency (the ability for a model to predict what its output would be in a hypothetical other context)

- compositional consistency (consistency of a model’s outputs for a compositional task even when an intermediate step is replaced with the model’s output for that step)

- faithfulness metric = model sensitivity to removing some of the explanation

- Question Decomposition Improves the Faithfulness of Model-Generated Reasoning (anthropic, 2023) - introduce factored decomposition to improve faithfulness metric

- Measuring Faithfulness in Chain-of-Thought Reasoning (anthropic, 2023) - in addition to just removing some of the explanation, also add mistakes to it / paraphrase it

- larger models become less faithful by this metric

- Logical Satisfiability of Counterfactuals for Faithful Explanations in NLI (sia…zettlemoyer, mathias, 2023)

- Towards Consistent Natural-Language Explanations via Explanation-Consistency Finetuning (chen…gao, 2024)

- Measuring and Improving Attentiveness to Partial Inputs with Counterfactuals (elazar…sameer singh, noah smith, 2023)

- Faithful Explanations of Black-box NLP Models Using LLM-generated Counterfactuals (gat…reichart, 2023)

- Counterfactually Aware Fair Text Generation (banerjee…bhatia, 2023)

- Causal Proxy Models for Concept-based Model Explanations (wu…potts, 2023)

- Evaluating Models’ Local Decision Boundaries via Contrast Sets (gardner…zhou, 2020)

- Are LLMs Post Hoc Explainers? (kroeger…lakkaraju, 2023)

- Followups to Chain of Thought Prompting (wei et al. 2022)

- in few-shot prompts, don’t just provide answer but also reasoning

- model output then provides reasoning + answer

- Self-Discover: LLMs Self-Compose Reasoning Structures (zhou…le…zheng, 2024) - LLMs come up with their own step-by-step structure for a task

- Self-Consistency Improves Chain of Thought Reasoning in Language Models (wang, wei, schuurmans, quoc le, … zhou, 2022) - sample multiple outputs rather than decoding greedily and return the most consistent final answer in the set

- Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them (suzgun, …, quoc le, …, jason wei, 2022)

- self-ask (Press et al., 2022) - LLM asks itself (and then answers) follow-up questions before answering the initial question

- Text Classification via LLMs (sun…wang, 2023) - add clues to the prompt

- Let’s Do a Thought Experiment: Using Counterfactuals to Improve Moral Reasoning (ma, …, chen, 2023) - counterfactuals help improve CoT

- RCOT: Detecting and Rectifying Factual Inconsistency in Reasoning by Reversing Chain-of-Thought (xue et al. 2023)

- SelfCheck: Using LLMs to Zero-Shot Check Their Own Step-by-Step Reasoning (miao, teh, & rainforth, 2023)

- EchoPrompt: Instructing the Model to Rephrase Queries for Improved In-context Learning (mekala…sameer singh, 2023) - replace let’s think step by step with Let’s repeat the question and also think step by step

- scratchpads Show Your Work: Scratchpads for Intermediate Computation with Language Models (nye et al. 2021)

- selection inference (creswell et al. 2022) - generate set of facts, then iteratively generate inferences from the facts to yield the final answer

- least-to-most prompting (zhou…quoc le et al. 2022) - prompt LLM with context showing how to reduce into subproblems; then LLM sequentially solves the subproblems, using the previous answers

- Generated Knowledge Prompting for Commonsense Reasoning (liu…hajishirzi, 2021) - generate knowledge from an LLM then provide it as additional input when answering a question

- maieutic prompting (jung et al. 2022) - generate a tree of all explanations of the form “True, because…”, “False, because…” then query LLM with these as prompts

- then use Max-SAT to try to satisfy as many relations between the model explanations as possible to come up with the true answer

- LM vs LM: Detecting Factual Errors via Cross Examination (cohen et al. 2023)

- Thread of papers combating hallucination
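The self-consistency idea from the chain-of-thought followups above (sample several reasoning paths, keep the most common final answer) reduces to a majority vote. A minimal sketch, where `sample_answer` is a hypothetical callable that runs one sampled chain of thought and returns only its final answer:

```python
from collections import Counter


def self_consistent_answer(sample_answer, question, n_samples=5):
    """Return (majority answer, fraction of samples that agree with it)."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / n_samples
```

The agreement fraction is a byproduct, but it doubles as a crude confidence signal.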

- training

- verifiers (cobbe et al. 2021) - train model to judge whether an answer and thought are likely to be “valid”

- subgoal search (czechowski et al. 2021) - train model to generate subgoals then solve them in a graph

- STaR “Self-taught reasoner” (zelikman…goodman, 2022)

- first, finetune on observed $(Q, T, A)$ triplets, where $T$ is a rationale

- then, impute unknown $T_i$ given dataset of pairs $(Q_i, A_i)$ by sampling until finding a $T_i$ which leads to the correct answer
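The rationale-imputation step of STaR described above can be sketched as rejection sampling. `sample` is a hypothetical stand-in for a model call that returns a `(rationale, predicted_answer)` pair, and `max_tries` is an arbitrary cap added for this example; the finetuning step itself is not shown.

```python
def impute_rationales(pairs, sample, max_tries=8):
    """Build (Q, T, A) triplets from (Q, A) pairs.

    For each question, sample rationales until one leads to the known
    answer; questions with no successful sample are skipped.
    """
    kept = []
    for question, answer in pairs:
        for _ in range(max_tries):
            rationale, predicted = sample(question)
            if predicted == answer:
                kept.append((question, rationale, answer))
                break
    return kept
```

The returned triplets would then be used as additional finetuning data.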

- robotics-specific

- zero-shot planning (huang, abbeel, pathak, & mordatch, 2022)

- socratic models

- Inner Monologue

- global workspace

- tree-related

- Aug-tree (singh, askari, caruana, & gao, 2023)

- Tree-prompting (morris, singh, rush, gao, & deng, 2023)

- Interpretable-by-Design Text Classification with Iteratively Generated Concept Bottleneck (ludan…callison-burch, 2023)

- tree of thoughts (yao et al. 2023) - LLM generates a tree of intermediate answers and performs steps such as backtracking

- Graph of Thoughts: Solving Elaborate Problems with LLMs (besta, .., hoefler, 2023) - allows merging/looping in the tree, e.g. for sorting

- frugalGPT (chen, zaharia, & zou, 2023)

- 3 components

- prompt adaptation - identify effective / shorter prompts (e.g. fewer demonstrations)

- LLM approximation - create simpler/cheaper LLMs

- LLM cascade - adaptively choose LLM based on query

- train “generation scoring function” - returns reliability score from 0 to 1 for each (question, answer)

- router sequentially proceeds through LLM APIs, returning the answer if the reliability score is high enough

- frugalML (chen, zaharia, zou, 2020) - tradeoff performance with budget for sequential cascade of API calls for single label

- FrugalMCT (chen, zaharia, zou, 2022) - extends to multilabel

- EcoAssistant: Using LLM Assistant More Affordably and Accurately (zhang…awadallah, wang, 2023) - answer code-driven queries efficiently using code executor + cascade of increasingly complex LLMs
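The LLM cascade idea from the FrugalGPT notes above (try cheaper models first, stop once a reliability score is high enough) might look like the sketch below. The single shared `threshold` is a simplification made for this example, and `models` / `scorer` are hypothetical callables, not a real API.

```python
def llm_cascade(query, models, scorer, threshold=0.8):
    """Route a query through models ordered from cheapest to most expensive.

    models: list of (name, generate) pairs, where generate(query) -> answer
    scorer: scorer(query, answer) -> reliability score in [0, 1]
    Returns the (name, answer) of the first model whose answer scores at or
    above the threshold, falling back to the last model's answer.
    """
    if not models:
        raise ValueError("need at least one model")
    for name, generate in models:
        answer = generate(query)
        if scorer(query, answer) >= threshold:
            break  # reliable enough: skip the pricier models
    return name, answer
```

Cost savings come from the early `break`: expensive models are only called when the cheaper ones score poorly.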

- decoding

- Greedy - iteratively pick highest-probability token

- Nucleus sampling: The Curious Case of Neural Text Degeneration (holtzman…choi, 2019)

- Contrastive decoding (li et al. 2022) - decode based on the difference between a large and small LLM

- Context-aware decoding (shi, …zettlemoyer, yih, 2023) - the difference between the output probabilities when a model is used with and without context

- DoLa: Decoding by Contrasting Layers Improves Factuality in LLMs (chuang…he, 2023) - contrasting later layers with early layers can improve truthfulness

- Calibrate Before Use: Improving Few-Shot Performance of Language Models (zhao, …, dan klein, sameer singh, 2021) - to make prompting easier, first calibrate output distr by making it uniform when given null inputs, e.g. “N/A”

- Minimum Bayes Risk Decoding (suzgun, …, jurafsky, 2022) or (freitag et al. 2022)

- A Frustratingly Simple Decoding Method for Neural Text Generation (yang, …, shi, 2023) - build an anti-LM based on previously generated text and use this anti-LM to penalize future generation of what has been generated
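A single step of contrastive decoding, as summarized in the decoding list above, can be sketched as picking the token with the largest log-probability gap between the large and small model. The plausibility cutoff `alpha` (only consider tokens the large model itself finds reasonably likely) and its default of 0.1 are assumptions here, and the dict-based interface is just for illustration.

```python
import math


def contrastive_pick(p_large, p_small, alpha=0.1):
    """Pick the next token from two next-token distributions.

    p_large, p_small: dicts mapping token -> probability (all values > 0,
    same keys). Tokens are first filtered to those with
    p_large >= alpha * max(p_large), then ranked by log p_large - log p_small.
    """
    cutoff = alpha * max(p_large.values())
    plausible = [t for t, p in p_large.items() if p >= cutoff]
    return max(plausible, key=lambda t: math.log(p_large[t]) - math.log(p_small[t]))
```

Without the cutoff, a token that both models consider very unlikely could still win on the ratio alone. The same difference-of-log-probabilities pattern applies to context-aware decoding, with the "small model" replaced by the same model run without the context.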

- fast decoding

- KV caching + some other tricks - if repeatedly using the same tokens at the beginning of the context, can cache the KV vectors for those tokens

- KV caching trades off speed with memory

- speculative decoding (leviathan, kalman, & matias, 2022) - draft multiple tokens with a small model, then verify them in parallel with the large model, potentially skipping steps for the large model
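A simplified, greedy-only sketch of the speculative decoding idea above: a cheap model drafts a few tokens, and the expensive model keeps the longest prefix it agrees with. This is not the sampling-based acceptance rule from the paper; `target_next` and `draft_next` are hypothetical callables mapping a token list to the next token, and the verification loop here is sequential where a real system would use one batched forward pass.

```python
def speculative_greedy(target_next, draft_next, prompt, n_new, k=4):
    """Generate n_new tokens; the output matches plain greedy decoding with target_next."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    while len(tokens) < goal:
        # 1) the cheap model drafts up to k tokens autoregressively
        draft = []
        for _ in range(min(k, goal - len(tokens))):
            draft.append(draft_next(tokens + draft))
        # 2) the expensive model checks each drafted position
        accepted = []
        for tok in draft:
            expected = target_next(tokens + accepted)
            accepted.append(expected)
            if expected != tok:
                break  # first mismatch: keep the target's token, drop the rest
        tokens.extend(accepted)
    return tokens
```

When the draft model agrees often, each loop iteration adds several tokens for roughly the latency of one large-model pass; when it disagrees, the output is still correct but no faster.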

- early exit - popular way to speed up inference

- Multi-exit vision transformer for dynamic inference (Bakhtiarnia, A., Zhang, Q. and Iosifidis, A., 2021)

- early layers have large activation map so early-exit classifier must be complex

- solution: ViT class token allows early-exit classifier to have constant complexity

- DeeBERT: Dynamic early exiting for accelerating BERT inference (xin…lin, 2020)

- prompt ensembles

- liu…neubig, 2023 review discusses different strategies for ensembling prompts, e.g. averaging, weighted averaging

- black-box querying

- Tree-Prompting (morris…deng, 2023)

- PromptBoosting: Black-Box Text Classification with Ten Forward Passes (hou, …, jacob andreas, …, zhang, 2022) - get a small pool of prompts, learn a verbalizer (final classification layer) for each, then ensemble them with AdaBoost on LLM output

- many works have studied prompt ensembling (e.g. lester et al. 2021)

- Boosted Prompt Ensembles for LLMs (pitis…ba, 2023) - similar but use CoT-style prompts and tasks, e.g. GSM8k

- PREFER: Prompt Ensemble Learning via Feedback-Reflect-Refine (zhang…cai, 2023) - builds set of prompts dynamically rather than assuming they’re fixed

- PTR: Prompt Tuning with Rules for Text Classification (han et al. 2021) – use logic rules to construct prompts with sub-prompts for many-class text classification (prompt is constructed hierarchically, but only one call is made to the LLM for inference)

- soft prompts

- Learning How to Ask: Querying LMs with Mixtures of Soft Prompts (Qin & Eisner, 2021) - learn a mixture of soft prompts using gradient descent

- require model retraining

- PRBOOST: Prompt-Based Rule Discovery and Boosting for Interactive Weakly-Supervised Learning (zhang…zhang, 2022) - iteratively (1) select high-error examples, (2) have human label them as rules, and (3) use boosting to train model on the new rules + ensemble

- typical rule generation

- Snuba (Varma and Ré, 2018) generates heuristics based on a small labeled dataset with pre-defined rule types

- TALLOR (Li et al. 2021a) & GLaRA (Zhao et al. 2021) study rule expansion for NER problem based on lexical information and then select rules based on a hand-tuned threshold

- Prompt ensembling / selection without labels

- Zero-Label Prompt Selection (liao, zheng, & yang, 2022) - use prompts to label unlabeled data and then select prompts using these labels

- A Simple Zero-shot Prompt Weighting Technique to Improve Prompt Ensembling in Text-Image Models (allingham…lakshminarayanan, 2023) - use confidence (max output logit) after appropriate normalization as weight

- self-verification

- review on self-verification (pan…wang, 2023)

- Self-Refine: Iterative Refinement with Self-Feedback (madaan, …, clark, 2023)

- Self-Verification Improves Few-Shot Clinical Information Extraction (gero et al. 2023)

- SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative LLMs (manakul…gales, 2023)

llm querying / causal inference

- Can LLMs Infer Causation from Correlation? (jin…scholkopf, 2023) - introduce Corr2Cause dataset (must infer causal graph from correlational statements), doesn’t test pre-existing knowledge

- Causal Reasoning and LLMs: Opening a New Frontier for Causality (kiciman…tan, 2023)

- LLMs to be used alongside existing causal methods, as a proxy for human domain knowledge and to reduce human effort in setting up a causal analysis

- cause-effect pairs, LLM has to discover from graph (tubingen benchmark, neuropathic pain, etc.)

- Causal Inference in Natural Language Processing: Estimation, Prediction, Interpretation and Beyond (feder…veitch, diyi yang, 2022)

- Zero-shot causal learning (nilforoshan…leskovec, 2023)

- Discovering Latent Knowledge in Language Models Without Supervision (burns, ye, klein, & steinhardt, 2022) - identify whether text is true or false directly from a model’s unlabeled activations

- Inference-Time Intervention: Eliciting Truthful Answers from a Language Model (li…pfister, wattenberg, 2023)

- InferBERT: A Transformer-Based Causal Inference Framework for Enhancing Pharmacovigilance (wang…liu, 2021) - learn + test feature relationships from attention weights

- CausaLM: Causal Model Explanation Through Counterfactual Language Models (2021) - produce example-level causal model explanations using models finetuned on auxiliary adversarial tasks derived from the causal graph of the problem

- Investigating Gender Bias in Language Models Using Causal Mediation Analysis (vig, …, shieber, 2020)

- Applies causal mediation analysis to identify decisive neurons and attention heads responsible for gender bias in LLMs

- Identifies a small handful of decisive attention heads in this case

- Amnesic Probing: Behavioral Explanation with Amnesic Counterfactuals (elazar, …, goldberg, 2021) - measure the importance of specific info within a model by introducing a causal intervention to erase that information, then observing the causal effects

- TrustLLM (sun…zhao, 2024) - evaluation and benchmark of many aspects of trustworthiness (github)

- What Evidence Do Language Models Find Convincing? (wan, wallace, & klein, 2024) - rather than relying on facts, LLMs largely rely on textual similarities in evidence to decide whether it’s important

- Deductive Closure Training of Language Models for Coherence, Accuracy, and Updatability (akyurek…andreas, 2024) - LMs generate additional text implied by documents, reason about the generated text, and finetune on the correct text

- LMs’ reasoning capabilities during inference can be leveraged during training to improve their reliability

- uncertainty

- Semantic Uncertainty (kuhn, gal, & farquhar, 2023) - instead of calculating entropy over tokens, first generate a set of answers, then cluster them based on semantic equivalence, before computing entropy over the clusters

- clustering is done via an LM that tests entailment in both directions, e.g. “The capital of France is Paris.” entails “Paris is the capital of France.” (and vice versa) because they mean the same thing
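
A minimal sketch of the clustering-then-entropy idea, under loud assumptions: `toy_equivalent` is a hypothetical stand-in for the LM entailment check (it only compares bags of words), and cluster probabilities are estimated from sample counts rather than from sequence likelihoods.

```python
import math
from typing import Callable, List


def cluster_by_equivalence(answers: List[str],
                           equivalent: Callable[[str, str], bool]) -> List[List[str]]:
    """Greedily group answers: each answer joins the first cluster whose
    representative (first member) it is judged equivalent to."""
    clusters: List[List[str]] = []
    for ans in answers:
        for cluster in clusters:
            if equivalent(cluster[0], ans):
                cluster.append(ans)
                break
        else:
            clusters.append([ans])
    return clusters


def semantic_entropy(answers: List[str],
                     equivalent: Callable[[str, str], bool]) -> float:
    """Entropy over meaning clusters, using cluster frequencies as probabilities."""
    clusters = cluster_by_equivalence(answers, equivalent)
    n = len(answers)
    return -sum((len(c) / n) * math.log(len(c) / n) for c in clusters)


def toy_equivalent(a: str, b: str) -> bool:
    # Hypothetical stand-in for bidirectional entailment: same bag of words.
    def norm(s: str) -> List[str]:
        return sorted(s.lower().strip(".").split())
    return norm(a) == norm(b)


samples = [
    "The capital of France is Paris.",
    "Paris is the capital of France.",
    "The capital of France is Lyon.",
]
entropy = semantic_entropy(samples, toy_equivalent)  # two clusters, sizes 2 and 1
```

The two Paris answers differ token by token but land in one cluster, so they do not inflate the entropy the way token-level entropy would.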

- Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs (xiong…hooi, 2023)

- verbalized uncertainty - model outputs its own uncertainty

- consistency-based uncertainty - consistency between output generations
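
A small sketch of consistency-based uncertainty, assuming the sampled generations are already short answers that can be compared after lowercasing and stripping whitespace (the function name and the normalization are illustrative choices, not the paper's exact procedure).

```python
from collections import Counter
from typing import List, Tuple


def consistency_confidence(generations: List[str]) -> Tuple[str, float]:
    """Return the majority answer and the fraction of samples that agree with it."""
    counts = Counter(g.strip().lower() for g in generations)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(generations)


answer, confidence = consistency_confidence(["Paris", "paris", "Lyon", "Paris "])
```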

- Quantifying Uncertainty in Natural Language Explanations of LLMs (tanneru…lakkaraju, 2023)

- probing uncertainty (like consistency-based uncertainty above) - applies input perturbations (e.g., paraphrasing) and measures the consistency of the resulting explanations

- verbalized uncertainty of explanations often performs poorly

- Relying on the Unreliable: The Impact of Language Models’ Reluctance to Express Uncertainty (zhou…sap, 2024)

- LMs are often unable to express uncertainties

- when LMs do express confidence, they tend to be overconfident

- users rely heavily on LM generations, whether or not they are marked by certainty

- Teaching Models to Express Their Uncertainty in Words (Lin et al., 2022) - GPT3 can generate both an answer and a level of confidence (e.g. “90% confidence”)

- Decomposing Uncertainty for LLMs through Input Clarification Ensembling (hou…zhang, 2023)

misc

adaptation / transfer

These are transformer-specific. For more general notes, see 📌 transfer learning or 📌 uncertainty. Most of these approaches can be combined with metalearning.

- finetuning

- finetune all DNN params

- finetune linear layer on activations

- standard - train linear model on the embedding of the first token (usually an added `[CLS]` token) (peters et al. 2018)

- finetune linear model on all the activations

- e.g. evci, et al. 2022 - learn linear layer (using group-lasso) on features extracted from all layers

- finetune specific DNN params (e.g. just the bias terms)

- Cutting Down on Prompts and Parameters (logan…sameer singh, riedel, 2021) - finetune only the bias terms; works even with null prompts

- BitFit: Simple Parameter-efficient Fine-tuning for Transformer-based Masked Language-models (zaken, ravfogel, & goldberg, 2021) - finetune only bias terms
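
A toy illustration of bias-only finetuning, assuming a single linear layer in NumPy rather than a transformer: the "pretrained" weight matrix `W` stays frozen and only the bias `b` receives gradient updates. The data and the constant-shift task are made up for the sketch.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 4))        # "pretrained" weights, kept frozen
b = np.zeros(3)                    # bias terms: the only trainable parameters
X = rng.normal(size=(64, 4))
target_shift = np.array([1.0, -2.0, 0.5])
Y = X @ W.T + target_shift         # new task = old mapping plus a constant shift

W_before = W.copy()
lr = 0.1
for _ in range(200):
    pred = X @ W.T + b
    grad_b = 2 * (pred - Y).mean(axis=0)   # gradient of per-output mean squared error wrt b
    b -= lr * grad_b                        # W is never updated
```

Here the task differs from pretraining only by a shift, so the bias alone can fit it; on real tasks bias-only tuning is an approximation whose quality is an empirical question.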

- adapter - finetune lightweight layers on top of pre-trained layers (between finetuning all layers, and just finetuning a new layer)

- add some new layers and retrain some specific things (all human choices)

- side-tuning (zhang, sax…malik, 2020) - train a “side” network that is fused with the pretrained model via summation

- Combining Modular Skills in Multitask Learning (ponti, sordoni, bengio, & reddy, 2022) - learn adaptor with disentangled inventory of skills

- Parameter-Efficient Transfer Learning for NLP

- AdapterHub: A Framework for Adapting Transformers

- vaguely similar to adapter

- LoRA

- QLoRA: Efficient Finetuning of Quantized LLMs (dettmers, …, zettlemoyer, 2023)

- TOAST (shi, …, darrell, xin wang, 2023) - use top-down attention steering for efficient finetuning

- predict a mask

- ablate some model weights by training a binary mask over model parameters (Zhao et al., 2020; Radiya-Dixit and Wang, 2020)

- predict mask over attention heads

- prompting = few-shot learning = priming = in-context learning (starts with GPT)

- prompting without changing any model parameters

- limitation: can’t exploit sets longer than the training window

- MetaICL: Learning to Learn In Context (min et al. 2022) - tune LLM to do in-context learning on a large set of training tasks (few-shot prompting at training time and at test time)

- Visual Prompting via Image Inpainting (bar…darrell, globerson, efros, 2022)

- Pattern-Exploiting Training (PET) - Exploiting Cloze Questions for Few Shot Text Classification and Natural Language Inference (schick & schutze, 2021)

- cloze questions - same as masked language modeling: task is to replace some missing words

- use cloze-question templates (e.g. it was “good” or “bad”) to get soft labels for unlabeled data and then finetune on these

- prompt-tuning (also see next section on autoprompting)

- Attentional Mixtures of Soft Prompt Tuning for Parameter-efficient Multi-task Knowledge Sharing

- STT: Soft Template Tuning for Few-Shot Adaptation

- Mixture of Soft Prompts for Controllable Data Generation (chen, … yu, 2023) - LLMs as Synthetic Data Generators for Training Smaller Models

mt-dnn line of work

- Multi-Task Deep Neural Networks for Natural Language Understanding (xiaodong liu … gao 2019) - multi-task learning on the 9 glue tasks (first layers are shared, then some task-specific layers at top)

- RAdam: On the Variance of the Adaptive Learning Rate and Beyond (liyuan liu…gao, han, 2020)

- usually need to do learning-rate warmup when training (e.g. with Adam)

- RAdam = add a term to rectify the variance of the adaptive learning rate in Adam

- SMART: Robust and Efficient Fine-Tuning for Pre-trained Natural Language Models through Principled Regularized Optimization (jiang…gao, zhao, 2020)

- Smoothness-inducing regularization, which effectively manages the complexity of the model

- Bregman proximal point optimization to prevent aggressive updating

- Microsoft Toolkit of Multi-Task Deep Neural Networks for Natural Language Understanding (xiaodong liu…gao, 2020)

- Posterior Differential Regularization with f-divergence for Improving Model Robustness (hao cheng, …, gao 2021)

- regularize model posterior difference between clean + noisy inputs (e.g. adversarially attacked inputs)

comparing different tasks

- Task2Vec: Task Embedding for Meta-Learning (achille, …, soatto, perona, 2019) - summarize each task as a vector, by taking diagonal of fisher info matrix (derivative of network output wrt parameters) - clusters similar tasks

- Efficiently Tuned Parameters are Task Embeddings (zhou…mcauley, 2022)

- Editing Models with Task Arithmetic (ilharco, ribeiro, …, farhadi, 2022) - task vector = (model weights after task finetuning) minus (model weights before finetuning)

- can use this direction to alter model behavior
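
A minimal sketch of task arithmetic on toy weights, assuming models are plain dicts of NumPy arrays with matching keys (the helper names and the `scale` parameter are illustrative).

```python
import numpy as np


def task_vector(pretrained: dict, finetuned: dict) -> dict:
    """Per-parameter difference: finetuned weights minus pretrained weights."""
    return {k: finetuned[k] - pretrained[k] for k in pretrained}


def apply_task_vector(pretrained: dict, vector: dict, scale: float = 1.0) -> dict:
    """Move the pretrained weights along the task direction by `scale`."""
    return {k: pretrained[k] + scale * vector[k] for k in pretrained}


pretrained = {"w": np.array([1.0, 2.0]), "b": np.array([0.0])}
finetuned = {"w": np.array([1.5, 1.0]), "b": np.array([0.2])}

tau = task_vector(pretrained, finetuned)
recovered = apply_task_vector(pretrained, tau, scale=1.0)   # reproduces the finetuned weights
negated = apply_task_vector(pretrained, tau, scale=-1.0)    # steps away from the task
```

Adding several task vectors to the same pretrained weights is the multi-task variant of the same operation.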

- Overcoming Catastrophic Forgetting in Zero-Shot Cross-Lingual Generation (vu…constant, 2022) - train with prompts of some (language translation, task) pairs and show that they can generalize to new (language, task) pairs

instruction tuning / rlhf

- Teach Llamas to Talk: Recent Progress in Instruction Tuning (gao blogpost 2023)

- Tell Your Model Where to Attend: Post-hoc Attention Steering for LLMs (zhang et al. 2023)

- The Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction (sharma…misra, 2023)

- human feedback

- Learning to summarize with human feedback (OpenAI, 2020)

- Can language models learn from explanations in context? (lampinen et al. 2022)

- natural language feedback (scheurer et al. 2022) - makes training more efficient

- Training Language Models with Language Feedback at Scale (scheurer et al. 2023)

- Explanation-based Finetuning Makes Models More Robust to Spurious Cues (ludan…callison-burch, 2023)

- Post hoc explanations of language models can improve language models (krishna…singh, lakkaraju, 2023) - use rationales as corrective signals for LLMs

- RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback (lee…rastogi, 2023)

- Tuning Language Models by Proxy (liu…choi, smith, 2024)

- Self-Rewarding Language Models (yuan…weston, 2024)

model merging

Model merging (some of these are non-transformer papers) = combine different models that have the same architecture (see collection of papers here and huggingface blog post here). Also see the review paper Deep Model Fusion: A Survey (li…shen, 2023)

- standard methods (see mergekit package)

- linear averaging, e.g. model soups (wortsman…schmidt, 2021)

- spherical linear interpolation - interpolate angle but keep norm constant
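
A sketch of spherical linear interpolation between two flattened weight vectors, assuming the usual slerp formula with a linear fallback when the vectors are nearly parallel. The norm is preserved exactly only when both inputs have the same norm.

```python
import numpy as np


def slerp(a: np.ndarray, b: np.ndarray, t: float, eps: float = 1e-8) -> np.ndarray:
    """Interpolate along the arc between a and b (t=0 gives a, t=1 gives b)."""
    a_unit = a / (np.linalg.norm(a) + eps)
    b_unit = b / (np.linalg.norm(b) + eps)
    omega = np.arccos(np.clip(np.dot(a_unit, b_unit), -1.0, 1.0))  # angle between a and b
    if np.sin(omega) < eps:                     # nearly (anti)parallel: use plain lerp
        return (1 - t) * a + t * b
    return (np.sin((1 - t) * omega) * a + np.sin(t * omega) * b) / np.sin(omega)


a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
mid = slerp(a, b, 0.5)          # stays on the unit circle
lerp_mid = 0.5 * a + 0.5 * b    # linear average shrinks the norm
```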

- TIES: Resolving Interference When Merging Models (yadav…raffel, bansal, 2023)

- only keep top-k% most significant changes in weights

- vote on signs of parameters
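
A compact sketch of the two TIES steps above on flattened task vectors (finetuned minus base weights). Assumptions: the sign vote is weighted by magnitude (sign of the summed trimmed vectors), and surviving values that agree with the elected sign are averaged; `keep_frac` is an illustrative name for the top-k% fraction.

```python
import numpy as np


def ties_merge(task_vectors, keep_frac=0.2):
    """Trim each task vector, elect a sign per parameter, average the agreeing values."""
    trimmed = []
    for tv in task_vectors:
        k = max(1, int(round(keep_frac * tv.size)))
        threshold = np.sort(np.abs(tv))[-k]            # k-th largest magnitude
        trimmed.append(np.where(np.abs(tv) >= threshold, tv, 0.0))
    trimmed = np.stack(trimmed)                        # (num_models, num_params)

    elected_sign = np.sign(trimmed.sum(axis=0))        # magnitude-weighted sign vote
    agrees = (np.sign(trimmed) == elected_sign) & (trimmed != 0)
    counts = agrees.sum(axis=0)
    summed = (trimmed * agrees).sum(axis=0)
    return np.where(counts > 0, summed / np.maximum(counts, 1), 0.0)


tv1 = np.array([0.9, -0.1, 0.5, 0.0])
tv2 = np.array([-0.8, 0.05, 0.7, 0.1])
merged = ties_merge([tv1, tv2], keep_frac=0.5)
```

The merged vector would then be added back to the base model weights, typically with a scaling factor.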

- DARE (yu…li 2023)

- randomly reset $p$ fraction of changed fine-tuned weights to their original values in the base model

- rescale remaining changed weights by $1/(1-p)$
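
A sketch of the drop-and-rescale step, assuming each changed weight is dropped independently with probability $p$ (so the dropped fraction is $p$ only in expectation). The function name and toy arrays are illustrative.

```python
import numpy as np


def dare(base: np.ndarray, finetuned: np.ndarray, p: float,
         rng: np.random.Generator) -> np.ndarray:
    """Reset each weight change to zero with probability p, rescale survivors by 1/(1-p)."""
    delta = finetuned - base
    keep = rng.random(delta.shape) >= p
    return base + keep * delta / (1.0 - p)


base = np.zeros(8)
finetuned = np.linspace(0.1, 0.8, 8)
sparse_model = dare(base, finetuned, p=0.5, rng=np.random.default_rng(0))
```

The rescaling keeps the expected value of each weight change equal to the original change, which is why a large fraction of changes can be dropped before merging.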

- passthrough/frankenmerging

- stack layers to yield model with different size

- e.g. depth up-scaling creates a larger model by merging some layers and copying others (solar 10.7B, kim…kim, 2023)

- more complex posthoc methods

- Learning to Route Among Specialized Experts for Zero-Shot Generalization (muqeeth, …, raffel, 2024) - PHATGOOSE routes to different LoRA model for each token and at each layer

- Fisher-Weighted Averaging (matena & raffel, 2022) - merge models with same architecture with particular weights

- Git Re-Basin: Merging Models modulo Permutation Symmetries (ainsworth, hayase, & srinivasa, 2022) - permute units of one model to align them with a reference model before merging; supports linear mode connectivity between ResNet models on CIFAR

- ZipIt! Merging Models from Different Tasks without Training (stoica…hoffman, 2023) - layerwise merging & don’t merge all the layers

- Model Merging by Uncertainty-Based Gradient Matching (daheim…khan, 2023)

- UnIVAL: multimodal merging (shukor…cord, 2023)

- Multimodal Model Merging (sung…bansal, wang, 2023) - merge a separately trained vision & language model and get a multimodal model

- LoraHub (huang…lin, 2023) - given examples from a new task, merge LoRA adaptors

- AdaMerging: Adaptive Model Merging for Multi-Task Learning (yang…tao, 2023) - learn coefficients to average models by minimizing entropy on unlabeled test samples

- Model Ratatouille: Recycling Diverse Models for Out-of-Distribution Generalization (rame…bottou, lopez-paz, 2022) - finetune many models initially trained on diverse tasks then average their weights

- Diverse Weight Averaging for Out-of-Distribution Generalization (rame…cord, 2023)

- training paradigms

- Branch-Train-Merge: ELMS (Expert LMs) (li…smith, zettlemoyer 2022)

- parallel language model of smaller expert LMs

- each can be added/removed, ensembled, or parameter-averaged at any time for efficient scaling and rapid customization

- improves perplexities, when controlling for training cost

- require expert domain specialization

- Cluster-Branch-Train-Merge (gururangan…smith, zettlemoyer, 2023) - start by clustering data to do unsupervised domain discovery

- fit many models into one

- superposition of many models into one (cheung…olshausen, 2019) - both during training/testing models are indexed via a high-dim key for each task

- supermasks in superposition (wortsman, …, yosinski, farhadi, 2020) - randomly fixed base net + for each task finds subnet that performs well

- if task identity not given, correct subnet inferred by minimizing output entropy

- non-transformer

- snapshot ensembles - average different checkpoints during training (huang et al. 2017)

- stochastic weight averaging (izmailov, …, wilson, 2019) - average multiple checkpoints during training

- batch ensemble (wen et al. 2020) - have several rank-1 keys that index different weights hidden within one neural net

- data-based distillation for model merging (roth…akata, 2024) - can combine multiple models that excel at different classes using data-based distillation

- Model Fusion via Optimal Transport (singh & jaggi, 2019) - layer-wise fusion algorithm using optimal transport

- Qualitatively characterizing neural network optimization problems (goodfellow, vinyals, & saxe, 2014) - linear interpolation experiments on DNNs

editing

Editing is generally very similar to just adaptation/finetuning. One distinction is that it tends to try to keep changes localized, in an effort not to affect performance for most of the model.

- Tell Your Model Where to Attend: Post-hoc Attention Steering for LLMs (zhang, singh, liu, liu, yu, gao, zhao, 2023) - upweight attention scores at specific positions to improve LLM controllability

- Editing LLMs: Problems, Methods, and Opportunities (yao, …, zhang, 2023)

- model-editing = data-efficient alterations to a model

- memory-based

- SERAC: Memory-Based Model Editing at Scale (mitchell…manning, finn, 2022)

- keep track of list of edits in external memory and use them as appropriate context at test time (don’t finetune the model)

- T-Patcher (Huang et al., 2023) and CaliNET (Dong et al., 2022) introduce extra trainable parameters into the feed-forward module of PLMs

- weight updates

- Knowledge Neurons in Pretrained Transformers (dai et al. 2021) - integrated gradients wrt each neuron in BERT, then selectively update these neurons

- ROME: Locating and Editing Factual Associations in GPT (meng, bau et al. 2022)

- localize factual associations - causal intervention for identifying neuron activations that are decisive in a model’s factual predictions

- “causal traces” - run net multiple times, introducing corruptions and then restore states from original non-corrupted forward pass to see which states can restore the original results

- a small number of states contain info that can flip the model from one state to another

- change factual associations - modify feedforward weights to update specific factual associations using Rank-One Model Editing (ROME)

- MEMIT: Mass Editing Memory in a Transformer (meng…, bau, 2022)

- Aging with GRACE: Lifelong Model Editing with Discrete Key-Value Adapters (hartvigsen, …, palangi, …, ghassemi, 2023)

- Flexible Model Interpretability through Natural Language Model Editing (d’oosterlinck, …, potts, 2023)

- Model Editing with Canonical Examples (hewitt, …, liang, manning, 2024)

- meta-learning

- KnowledgeEditor: Editing Factual Knowledge in Language Models (de cao, aziz, & titov, 2021) - train a network that takes in input, output, edit and predicts a weight update to the model

- MEND: Fast model editing at scale (mitchell…finn, manning, 2022)

- a collection of small auxiliary editing networks that use a single desired input-output pair to edit a pre-trained model

- MEND learns to transform the gradient obtained by standard fine-tuning, using a low-rank decomposition of the gradient

- REMEDI (hernandez, li, & andreas, 2023) and related activation engineering

- get “edit vectors” by obtaining embeddings when passing attributes through LLM

- perform edit by adding linear transformation of edit vector to prompt embedding

- then, perform generation with latent embedding

- learn linear transformation given a dataset of examples with attributes and desired completions

- (also regularize the model to not change too much on other stuff)

- Activation Addition: Steering Language Models Without Optimization (turner…macdiarmid, 2023)

- blog post: activation engineering: Steering GPT-2-XL by adding an activation vector (turner, …, mini, 2023)

- obtain “steering vector” by embedding a phrase (e.g. love) and adding that vector to the llm embedding during generation

- they only add the embedding for some layers for some tokens

- Extracting Latent Steering Vectors from Pretrained Language Models (subramani, …, peters, 2022) - find latent vectors via optimization that cause an LLM to output a particular sequence

- then, use these vectors to do things like transfer to new tasks / compute textual similarity

- Function Vectors in LLMs (todd…wallace, bau, 2023)

- PURR: Efficiently Editing Language Model Hallucinations by Denoising Language Model Corruptions (chen…sameer singh…kelvin guu, 2023)

- new datasets

- MQUAKE: Assessing Knowledge Editing in Language Models via Multi-Hop Questions (zhong…manning, potts, chen, 2023) - introduces benchmark MQUAKE + method MeLLo, which stores edited facts externally while prompting the language model iteratively to generate answers that are consistent with the edited facts

- COUNTERFACT+ benchmark - checks that edits don’t affect existing info

- ALMANACS: A Simulatability Benchmark for Language Model Explainability

- model unlearning approaches (see review Rethinking Machine Unlearning for LLMs, liu et al. 2024)

- gradient ascent - worsen performance on set of examples to forget

- gradient descent - improve performance on examples labeled with hidden info, e.g. response “I don’t know”

- localization-informed unlearning, e.g. ROME

- influence function-based methods

- prompt-based (e.g. only change prompt rather than model parameters)

direct weight inspection

- overviews

- Overview of mechanistic interpretability (nanda, 2022+)

- review paper (rauker…hadfield-menell, 2023)

- Representation engineering: A Top-Down Approach to AI Transparency (zou…kolter, hendrycks, 2023)

- representation engineering (RepE) - analyzes representations/representation transformations rather than neurons or circuits

- basically extends probing to more general tasks, including model control

- Transformer visualization via dictionary learning: contextualized embedding as a linear superposition of transformer factors (yun, chen, olshausen, lecun, 2021) - investigate LLM embeddings of different words using dictionary learning

- LLMs produce interesting contextualized word embeddings

- dictionary elements (of activations across layers) correspond to meaningful things

- dictionary element has size $d$, the embedding size

- given list of sentences $S$, training matrix has size $\left(\underbrace{\text{num\_layers}}_{\text{12 for BERT}} \cdot \sum_{s \in S} \text{len}(s)\right) \times \underbrace{d}_{\text{768 for BERT}}$

- dictionary coefficient: maps (text, layer, sequence_index) $\to$ coefficient

- extract $d$-dimensional embedding for text at specified layer & sequence_index

- Neuron-level Interpretation of Deep NLP Models: A Survey (sajjad et al. 2022)

- previous works generally use pre-specified concepts, and focus on

- concept search - given a neuron find its concept(s)

- neuron search - given a concept find its matching neuron(s)

- concept search

- visualization, e.g. karpathy, johnson, fei-fei li, 2015 visualize LSTM head response in text

- elicit top-k ngram responses on a corpus, which are then labelled manually (kadar et al. 2017)

- elicit top-k activating sentences from a corpus, which are then summarized using a parse tree into a synthetic explanation (na…kim, 2019)

- limitation: the explanation may be ungrammatical and biased towards something arbitrary (like repetition)

- input maximization (e.g. textattack, poerner et al. 2018)

- Evaluating Neuron Interpretation Methods of NLP Models (fan…sajjad, 2023) - metric is how well evaluation from one method matches the other ones

- A Circuit for Indirect Object Identification in GPT-2 small (wang, …, steinhardt, 2022)

- explanation encompasses 26 attention heads grouped into 7 main classes

- task: indirect object identification - “When Mary and John went to the store, John gave a drink to ___” should be “Mary”

- circuit

- identify all previous names

- remove duplicated names

- output remaining name
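
The three circuit steps read like a tiny symbolic algorithm. A sketch of that algorithm on whitespace tokens, assuming the set of candidate names is given (this mirrors the described behavior, not the attention heads that implement it):

```python
from typing import List, Optional, Set


def ioi_answer(tokens: List[str], names: Set[str]) -> Optional[str]:
    """Symbolic analogue of the IOI circuit."""
    seen = [t for t in tokens if t in names]                # 1. identify all previous names
    duplicated = {n for n in seen if seen.count(n) > 1}     # 2. find the duplicated names
    remaining = [n for n in seen if n not in duplicated]    #    and remove them
    return remaining[0] if remaining else None              # 3. output the remaining name


prompt = "When Mary and John went to the store, John gave a drink to".split()
answer = ioi_answer(prompt, names={"Mary", "John"})
```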

- Circuit Component Reuse Across Tasks in Transformer Language Models (merullo, eickhoff, & pavlick 2024) - find that the same circuit is used for 2 different tasks: IOI from above and Colored objects (from big-bench)

- Interpretability at Scale: Identifying Causal Mechanisms in Alpaca (wu…, potts, goodman, 2023) - propose boundless DAS and automatically identify a circuit for math

- builds on DAS (geiger, …goodman, 2023)

- N2G: A Scalable Approach for Quantifying Interpretable Neuron Representations in LLMs (foote, nanda, …, barez, 2023) - explain each neuron in a graph

- Finding Skill Neurons in Pre-trained Transformer-based Language Models (wang et al. 2022) - some individual neurons are predictive of the final task (dubbed “skill neurons”)

- thread (elhage…olah, 2021)

- all layers are same dimension and each attention block adds a vector to it

- Although they’re parameterized as separate matrices, $W_O W_V$ and $W_Q^T W_K$ can always be thought of as individual, low-rank matrices

- $x \in \mathbb R^{d_{embed} \times d_{sequence}}$: $d_{embed}$ can be hundreds - tens of thousands

- $W_Q, W_K, W_V \in \mathbb R^{d_{attn} \times d_{embed}}$

- $W_Q^T W_K \in \mathbb R ^{d_{embed} \times d_{embed}}$

- $W_O \in \mathbb R^{d_{embed} \times d_{attn}}$: projects attention values back to embedding dimension

- $W_O W_V \in \mathbb R ^{d_{embed} \times d_{embed}}$

- $W_E \in \mathbb R^{d_{embed} \times d_{vocab}}$ embeds initial tokens and $W_U \in \mathbb R^{d_{vocab} \times d_{embed}}$ undoes the embedding

- $d_{vocab}$ can be very large, e.g. 50k

- $A = \text{softmax}(x^T W_Q^T W_K x) \in \mathbb R^{d_{sequence} \times d_{sequence}}$

- if we have a 0-layer net (e.g. predict next token with linear layer given current token), we just learn bigram log-likelihood

- 2 circuits

- QK circuit determines which “source” token the present “destination” token attends back to and copies information from

- $W_{E}^{T} W_{Q}^{T} W_{K} W_{E} \in \mathbb R ^{d_{vocab} \times d_{vocab}}$

- OV circuit describes what the resulting effect on the “out” predictions for the next token is

- $W_{U} W_{O} W_{V} W_{E} \in \mathbb R ^{d_{vocab} \times d_{vocab}}$
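
The shapes listed in this thread can be checked numerically. A sketch with small random matrices, assuming toy dimensions and following the notes' formula for $A$ literally (no causal mask, no $1/\sqrt{d}$ scaling):

```python
import numpy as np

rng = np.random.default_rng(0)
d_vocab, d_embed, d_attn, d_seq = 50, 16, 4, 6   # toy sizes, chosen for illustration

W_E = rng.normal(size=(d_embed, d_vocab))        # embedding
W_U = rng.normal(size=(d_vocab, d_embed))        # unembedding
W_Q, W_K, W_V = (rng.normal(size=(d_attn, d_embed)) for _ in range(3))
W_O = rng.normal(size=(d_embed, d_attn))

x = W_E[:, rng.integers(0, d_vocab, size=d_seq)]  # (d_embed, d_seq) token embeddings


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)       # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


W_QK = W_Q.T @ W_K                 # (d_embed, d_embed), rank at most d_attn
W_OV = W_O @ W_V                   # (d_embed, d_embed), rank at most d_attn
A = softmax(x.T @ W_QK @ x)        # (d_seq, d_seq), each row sums to 1

qk_circuit = W_E.T @ W_QK @ W_E    # (d_vocab, d_vocab): token-to-token attention scores
ov_circuit = W_U @ W_OV @ W_E      # (d_vocab, d_vocab): effect of attended token on output logits
```

Both products are large square matrices, but their rank is bounded by `d_attn`, which is the sense in which they are low-rank.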

- if a single head increases the probability of both `keep… in mind` and `keep… at bay`, it must also increase the probability of `keep… in bay` and `keep… at mind`

- induction heads search previous examples of present token

- If they don’t find it, they attend to the first token and do nothing

- if they do find it, they then look at the next token and copy it. This allows them to repeat previous sequences of tokens, both exactly and approximately

- sometimes can do some kind of “fuzzy” matching
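
A symbolic sketch of the exact-match induction behavior described above (search for an earlier occurrence of the present token, then copy what followed it). It ignores the "fuzzy" matching case, and the example tokens are made up.

```python
from typing import List, Optional


def induction_predict(tokens: List[str]) -> Optional[str]:
    """Predict the next token by copying what followed the most recent
    earlier occurrence of the current (last) token."""
    current = tokens[-1]
    for i in range(len(tokens) - 2, -1, -1):   # scan earlier positions, most recent first
        if tokens[i] == current:
            return tokens[i + 1]               # copy the token that came next
    return None                                # no earlier match: do nothing


pred = induction_predict(["Mr", "Dursley", "was", "proud", ".", "Mr"])
```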

- tensor/kronecker product $\otimes$:

- Left-right multiplying: Multiplying $x$ by a tensor product $A \otimes W$ is equivalent to simultaneously left and right multiplying: $(A \otimes W) x=A x W^{T}$

- When we add them, it is equivalent to adding the results of this multiplication: $\left(A_{1} \otimes W_{1}+A_{2} \otimes W_{2}\right) x=A_{1} x W_{1}^{T}+A_{2} x W_{2}^{T}$

- Softmax Linear Units

- replacing activation function with softmax linear unit increases fraction of MLP neurons which are “interpretable”, i.e. correspond to meaningful features

- however, may “hide” some non-neuron-aligned features by decreasing their magnitude and then later recovering it with LayerNorm

- the presence of nonlinear activation functions creates an incentive for features to align with this basis and not get superposed

- if the gains to sparse coding are large enough, this incentive will get overwhelmed

- ways to combat polysemanticity

- activation sparsity

- lateral inhibition / co-occurrence sparsity

- weight sparsity

- superlinear activation functions

- increase neurons per param

- $\text{SoLU}(x) = x \cdot \text{softmax}(x)$

- adds lateral inhibition, superlinearity, approximate sparsity

- changes GeLU, which is approximately $\text{sigmoid}(1.7x) \cdot x$

- just changing to SoLU decreases performance, had to add LayerNorm afterwards
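
A NumPy sketch of the activation as defined above, next to the sigmoid approximation of GeLU and a parameter-free LayerNorm. Assumptions: the softmax runs over the last (neuron) axis, and the LayerNorm has no learned scale or shift.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)    # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def solu(x: np.ndarray) -> np.ndarray:
    """SoLU(x) = x * softmax(x): large entries suppress the others (lateral inhibition)."""
    return x * softmax(x)


def gelu_approx(x: np.ndarray) -> np.ndarray:
    """GeLU approximated as sigmoid(1.7 x) * x, acting on each neuron independently."""
    return x / (1.0 + np.exp(-1.7 * x))


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize over the last axis (no learned parameters in this sketch)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


x = np.array([4.0, 1.0, 0.0, -1.0])
y = solu(x)                  # the largest pre-activation dominates, the rest shrink toward 0
y_normed = layer_norm(y)     # the extra LayerNorm applied after SoLU
```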

- logit lens (2020) - apply unembedding matrix to outputs of each transformer layer

- tuned-lens (belrose…steinhardt, 2023) - train linear model for each layer to decode vocab

- Analyzing Transformers in Embedding Space (dar, …, berant, 2022) - apply unembedding matrix to weights, etc. to interpret transformers

- In-Context Language Learning: Architectures and Algorithms (akyurek…andreas, 2024) - find evidence for “n-gram heads”, higher-order variants of previously seen “induction heads”

- Zoology: Measuring and Improving Recall in Efficient Language Models (arora…rudra, & re, 2023) - also find evidence for ngram heads

- A Phase Transition between Positional and Semantic Learning in a Solvable Model of Dot-Product Attention (cui…zdeborova, 2024) - solve 1-layer attention model for histogram task and find phase transition

- Rosetta Neurons: Mining the Common Units in a Model Zoo (dravid, …, efros, shocher, 2023)

- Multimodal Neurons in Pretrained Text-Only Transformers (schwettmann…torralba, 2023)

- Interpreting CLIP’s Image Representation via Text-Based Decomposition (gandelsman, efros, & steinhardt, 2023)

- Universal Neurons in GPT2 Language Models (gurnee…nanda, & bertsimas, 2024) - study the universality of neurons across GPT2 models trained from different initial random seeds

- The Hydra Effect: Emergent Self-repair in Language Model Computations (mcgrath…legg, 2023) - ablations at one attention layer of an LLM cause another layer to compensate

- Neurons in LLMs: Dead, N-gram, Positional (voita, ferrando, & nalmpantis, 2023)

- Vision transformers need registers (darcet…mairal, bojanowski, 2023)

- adding extra [reg1], [reg2] tokens that aren’t used at output improves vision transformer performance and attention map interpretability

- without these tokens, attention maps are sometimes very noisy, particularly for uninformative tokens

- Efficient Streaming Language Models with Attention Sinks (xiao…lewis, 2023)

- Codebook Features: Sparse and Discrete Interpretability for Neural Networks (tamkin, taufeeque, & goodman, 2023)

- Patchscope (ghandeharioun…geva, 2023) - decode LLM’s representation of a token by asking another copy of it to decode from that same representation

- Program synthesis via mechanistic interpretability (michaud…tegmark) - condense RNN on simple algorithmic tasks into code

- Linear Representations of Sentiment in LLMs (tigges…nanda, 2023) - sentiment is distributed across tokens (not just at sentiment-laden words)

debugging / interpretation

- TalkToModel: Understanding Machine Learning Models With Open Ended Dialogues (slack…lakkaraju, sameer singh, 2022) - natural language interface to query model (by converting to commands such as filtering the data / calculating importance)

- Rethinking Explainability as a Dialogue: A Practitioner’s Perspective (lakkaraju, slack, …, sameer singh, 2022) - interviews with high-stakes users suggest they would like to be able to interact with systems via dialog

- AdaTest: Adaptive Testing and Debugging of NLP Models (ribeiro & lundberg, 2022)

- goal: easily specify, discover, and fix undesirable behaviors in an NLP model

- 2-step iterative algorithm

- LLM generates many tests targeting the model’s failures

- example of a test: `f(“I am a black woman”) ≠ neg`

- user selects and organizes the tests and reprompts the LLM to find more

- user fixes the tests (e.g. via finetuning)

- CheckList - Beyond Accuracy: Behavioral Testing of NLP models with CheckList (ribeiro…sameer singh, 2020)

- matrix of general linguistic capabilities + test types

- Fixing Model Bugs with Natural Language Patches (murty, manning, lundberg, & ribeiro 2022)

- specify patches with natural language rather than hard rule, allowing them to better handle text

- finetune a model to combine original model output with output from a patch-conditioned interpreter head

- interpretable models

- Aug-imodels: Augmenting Interpretable Models with LLMs during Training (singh, askari, caruana, & gao, 2023)

security

- Interpretability and Transparency-Driven Detection and Transformation of Textual Adversarial Examples (IT-DT) (sabir, babar, & abuadbba, 2023)

- leverages techniques such as attention maps, integrated gradients, and model feedback to detect and then change adversarial inputs

- BEAST: Fast Adversarial Attacks on Language Models In One GPU Minute (sadasivan…feizi, 2024)

- sample attacks using beam search and tokens that induce strong issues

- datasets: harmbench & trustllm

- attacks from TextAttack:

| Attack Recipe Name | Goal Function | Constraints Enforced | Transformation | Search Method | Main Idea |

|---|---|---|---|---|---|

a2t |

Untargeted {Classification, Entailment} | Percentage of words perturbed, Word embedding distance, DistilBERT sentence encoding cosine similarity, part-of-speech consistency | Counter-fitted word embedding swap (or) BERT Masked Token Prediction | Greedy-WIR (gradient) | Yoo et al., 2021 |

alzantot |

Untargeted {Classification, Entailment} | Percentage of words perturbed, Language Model perplexity, Word embedding distance | Counter-fitted word embedding swap | Genetic Algorithm | Alzantot et al., 2018 |

bae |

Untargeted Classification | USE sentence encoding cosine similarity | BERT Masked Token Prediction | Greedy-WIR | Garg & Ramakrishnan, 2019. |

bert-attack |

Untargeted Classification | USE sentence encoding cosine similarity, Maximum number of words perturbed | BERT Masked Token Prediction (with subword expansion) | Greedy-WIR | Li et al., 2020 |

checklist |

{Untargeted, Targeted} Classification | checklist distance | contract, extend, and substitutes name entities | Greedy-WIR | Ribeiro et al., 2020 |

clare |

Untargeted {Classification, Entailment} | USE sentence encoding cosine similarity | RoBERTa Masked Prediction for token swap, insert and merge | Greedy | Li et al., 2020 |

deepwordbug |

{Untargeted, Targeted} Classification | Levenshtein edit distance | {Character Insertion, Character Deletion, Neighboring Character Swap, Character Substitution} | Greedy-WIR | Gao et al., 2018 |

fast-alzantot |

Untargeted {Classification, Entailment} | Percentage of words perturbed, Language Model perplexity, Word embedding distance | Counter-fitted word embedding swap | Genetic Algorithm | Jia et al., 2019 |

hotflip |

Untargeted Classification | Word Embedding Cosine Similarity, Part-of-speech match, Number of words perturbed | Gradient-Based Word Swap | Beam search | Ebrahimi et al., 2017 |

iga |

Untargeted {Classification, Entailment} | Percentage of words perturbed, Word embedding distance | Counter-fitted word embedding swap | Genetic Algorithm | Wang et al., 2019 |

input-reduction |

Input Reduction | Word deletion | Greedy-WIR | Feng et al., 2018 | |

kuleshov |

Untargeted Classification | Thought vector encoding cosine similarity, Language model similarity probability | Counter-fitted word embedding swap | Greedy word swap | Kuleshov et al., 2018 |

pruthi |

Untargeted Classification | Minimum word length, Maximum number of words perturbed | {Neighboring Character Swap, Character Deletion, Character Insertion, Keyboard-Based Character Swap} | Greedy search | Pruthi et al., 2019 |

pso |

Untargeted Classification | | HowNet Word Swap | Particle Swarm Optimization | Zang et al., 2020 |

pwws |

Untargeted Classification | | WordNet-based synonym swap | Greedy-WIR (saliency) | Ren et al., 2019 |

textbugger |

Untargeted Classification | USE sentence encoding cosine similarity | {Character Insertion, Character Deletion, Neighboring Character Swap, Character Substitution} | Greedy-WIR | Li et al., 2018 |

textfooler |

Untargeted {Classification, Entailment} | Word Embedding Distance, Part-of-speech match, USE sentence encoding cosine similarity | Counter-fitted word embedding swap | Greedy-WIR | Jin et al., 2019 |

privacy

- Training Data Extraction From Pre-trained Language Models: A Survey (ishihara, 2023)

- definitions

- (eidetic memorization) a string s is k-eidetic memorized by an LLM f if a prompt p exists such that f(p) = s and s appears at most k times in the training set

- slightly different definition: A string s is k-memorized with k tokens of context from LLM f if a (length-k) string p exists such that the concatenation p + s is contained in the training set, and f produces s when prompted with p by using greedy decoding

- differential privacy = removing any single data point from the training set should not considerably change the trained model

- counterfactual memorization = difference in a training example’s expected loss between models that have and have not been trained on that example

- some studies loosen the definition of memorization using a similarity metric for strings rather than exact string matching

- Extracting Training Data from LLMs (carlini, …, raffel, 2021) - LLMs are particularly likely to memorize atypical data points

- Quantifying Memorization Across Neural Language Models (carlini, …, zhang, 2022)

- What does it mean for a language model to preserve privacy? (brown, …, tramer, 2022) - “privacy-preserving” LM should guarantee that a user’s data cannot ever appear (or be inferable) outside the context they originally expected it to appear in

- Can Neural Network Memorization Be Localized? (maini, …, lipton, kolter, zhang, 2023) - memorization is often confined to a small number of neurons or channels, propose example-tied dropout to direct memorization to few neurons

- Detecting Personal Information in Training Corpora: an Analysis (subramani, luccioni, dodge, & mitchell, 2023)
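The k-memorized definition above can be checked mechanically. This is a minimal sketch under strong assumptions: the "LLM" is a toy n-gram counter, and `train_ngram`, `greedy_generate`, and `is_k_memorized` are hypothetical helper names, not anything from the surveyed papers. It only illustrates the logic of the definition: find a length-k prefix p such that p + s is in the training set, then test whether greedy decoding from p reproduces s.

```python
from collections import Counter, defaultdict

def train_ngram(docs, order):
    """Toy stand-in for an LLM: count which token follows each `order`-token context."""
    counts = defaultdict(Counter)
    for doc in docs:
        for i in range(order, len(doc)):
            counts[tuple(doc[i - order:i])][doc[i]] += 1
    return counts

def greedy_generate(counts, prompt, n_tokens, order):
    """Greedy decoding: always emit the most frequent continuation of the context."""
    out = list(prompt)
    for _ in range(n_tokens):
        ctx = tuple(out[-order:])
        if ctx not in counts:
            break  # unseen context: the toy model has nothing to emit
        out.append(counts[ctx].most_common(1)[0][0])
    return out[len(prompt):]

def is_k_memorized(counts, docs, s, k, order):
    """True if some length-k prefix p, with p + s in the training set,
    makes the model produce s under greedy decoding."""
    for doc in docs:
        for start in range(k, len(doc) - len(s) + 1):
            if doc[start:start + len(s)] == s:
                p = doc[start - k:start]
                if greedy_generate(counts, p, len(s), order) == s:
                    return True
    return False

# Made-up training set: one atypical document among more common ones.
docs = [
    "my phone number is 555 0199 call me".split(),
    "my phone number is private sorry".split(),
    "my phone number is private ok".split(),
]
order = 2
counts = train_ngram(docs, order)

rare = "0199 call me".split()      # only ever follows the context "is 555"
common = "555 0199 call".split()   # follows "number is", which usually continues differently
rare_is_memorized = is_k_memorized(counts, docs, rare, 2, order)
common_is_memorized = is_k_memorized(counts, docs, common, 2, order)
```

In this toy setup the continuation of the rare context is reproduced exactly, while the string that follows a common context is not, because greedy decoding prefers the more frequent continuation.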

architecture/attention variants

- state space models (good overview in albert gu thesis, 2023)

- S4: structured state space models (gu…re, 2022) - similar to RNNs but can predict all outputs at once via convolution

- the core of the state space model is basically a linear RNN

- inputs x, hidden states h, outputs y

- 3 matrices: $A, B, C$

- $y_i = C h_i$

- $h_i = A h_{i-1} + B x_i$

- note: there is no nonlinearity between hidden states

- note: the transition from one hidden state to the next is the same for all positions (except for the input)

- can compute all hidden states simultaneously by unrolling the recurrence: $h_i = \sum_{j=0}^{i-1} A^j B x_{i-j}$ (taking $h_0 = 0$), which is a convolution of x with the kernel $A^j B$

- mamba: selective state space models (gu & dao, 2023)

- changes the second note above: the transition from one hidden state to the next now depends on the input (making it closer to LSTMs)

- $B = B(x)$

- $C = C(x)$

- RNNs are not Transformers (Yet): The Key Bottleneck on In-context Retrieval (wen, dang, & lyu, 2024) - RNNs fail to retrieve info from long contexts, RAG helps

- Tree Transformer: Integrating Tree Structures into Self-Attention (wang, .., chen, 2019)

- Waveformer: Linear-Time Attention with Forward and Backward Wavelet Transform (zhuang…shang, 2022)

- White-Box Transformers via Sparse Rate Reduction: Compression Is All There Is? (yaodong yu…yi ma, 2023)
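The linear-RNN view of state space models noted above can be sketched in a few lines. This is an illustrative sketch only, not the S4 or mamba implementation: it assumes scalar inputs and outputs (so B and C are vectors), a zero initial state, and hypothetical function names. It shows that the step-by-step recurrence and the causal convolution with kernel $K_j = C A^j B$ give the same outputs, and that making B and C functions of the input (as in the mamba notes) keeps the recurrence but removes the fixed kernel.

```python
def matvec(M, v):
    """Matrix-vector product for plain nested lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def ssm_recurrent(A, B, C, xs):
    """h_i = A h_{i-1} + B x_i,  y_i = C h_i, computed one step at a time."""
    h = [0.0] * len(A)
    ys = []
    for x in xs:
        h = [ah + b * x for ah, b in zip(matvec(A, h), B)]
        ys.append(sum(c * hj for c, hj in zip(C, h)))
    return ys

def ssm_kernel(A, B, C, length):
    """Convolution kernel K_j = C A^j B for j = 0..length-1."""
    kernel, AjB = [], list(B)
    for _ in range(length):
        kernel.append(sum(c * v for c, v in zip(C, AjB)))
        AjB = matvec(A, AjB)
    return kernel

def ssm_convolution(A, B, C, xs):
    """y_i = sum_j K_j x_{i-j}: all outputs come from one causal convolution."""
    K = ssm_kernel(A, B, C, len(xs))
    return [sum(K[j] * xs[i - j] for j in range(i + 1)) for i in range(len(xs))]

def selective_ssm(A, B_fn, C_fn, xs):
    """Selective variant: B and C depend on the current input, so there is
    no single fixed kernel and the sequence is processed as a recurrence."""
    h = [0.0] * len(A)
    ys = []
    for x in xs:
        B, C = B_fn(x), C_fn(x)
        h = [ah + b * x for ah, b in zip(matvec(A, h), B)]
        ys.append(sum(c * hj for c, hj in zip(C, h)))
    return ys

# Made-up parameters for illustration.
A = [[0.9, 0.1], [0.0, 0.5]]
B = [1.0, 0.5]
C = [1.0, -1.0]
xs = [1.0, 2.0, 0.0, -1.0]

y_rec = ssm_recurrent(A, B, C, xs)
y_conv = ssm_convolution(A, B, C, xs)
y_sel = selective_ssm(A, lambda x: [1.0, 0.5 * x], lambda x: [1.0, -1.0], xs)
```

The convolution here is written as an explicit double loop for clarity; the speedup in practice comes from computing it with fast convolution routines, which this sketch does not attempt.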

mixture of experts (MoE) / routing

mixture of experts models have become popular because of the need for (1) fast speed / low memory at test time while still (2) having a large model during training

- note: nowadays often the “experts” are different MLPs following the self-attention layers

- A Review of Sparse Expert Models in Deep Learning (fedus, jeff dean, zoph, 2022)

- sparsity decouples the parameter count from the compute per example allowing for extremely large, but efficient models

- routing algorithm - determines where to send examples

- discreteness makes it difficult

- some works use RL to learn routing

- standard approach uses gumbel-softmax

- usually get matrix of similarities between input tokens and experts and route based on these

- sometimes route to topk experts rather than top1

- load balancing - usually add an auxiliary loss to encourage an equal number of tokens to be sent to each expert

- non-specialized experts

- Early versions (Jacobs, michael jordan, nowlan, & hinton, 1991) had independent feed-forward networks serving as experts

- Sparsely-gated MoE layer (Shazeer…quoc le, hinton, dean, 2017) - token-based routing trained with backprop

- replace FFN in transformers with expert layers

- GShard (Lepikhin et al. 2021) applies this concept to machine translation

- Switch transformers (Fedus et al. 2022) simplify the architecture by routing each token to only one expert per layer

- BASE Layers (Lewis et al. 2021) - formulate routing as a linear assignment problem

- Hash layers (Roller et al. 2021) use a fixed hash as the gating function

- routing notes - make hard decision but still want to learn probabilities

- straight-through estimator (STE) - take the argmax during the forward pass, while considering the original probabilities in the backward pass

- highly biased

- gumbel-softmax - allows for better sampling

- specialized experts as fully independent models (sometimes for multi-task learning)

- DEMix Layers (Gururangan…smith, zettlemoyer, 2021) - DEMix layers, placed in the feedforward layers of the Transformer, contain experts which specialize in specific domains. Routing at train time is determined only by the domain label, but all experts are activated at inference time and mixed according to weights estimated from a validation set

- Sparsely Activated Mixture-of-Experts are Robust Multi-Task Learners (gupta…awadallah, gao, 2022) - use task description to improve routing

- Pfeiffer et al. (2022) - multilingual expert model with language-specific routing

- task-level MoE (Kudugunta et al. 2021) - multi-task expert model with task-specific routing

- scaling up

- OPT-MOE (artetxe et al. 2021)

- AutoMoE (jawahar, mukherjee, liu…gao, 2022)

- Towards Understanding Mixture of Experts in Deep Learning (chen…gu, li, 2022)
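The routing and load-balancing ideas above can be made concrete with a small sketch. It assumes a softmax router over token-expert similarity scores, top-k selection with renormalized gates, and a Switch-style auxiliary loss of the form $N \sum_i f_i P_i$ ($f_i$ = fraction of tokens whose top-1 expert is $i$, $P_i$ = mean router probability for expert $i$), with the usual scaling coefficient omitted. All function names are hypothetical, and this is not the exact formulation of any single paper listed here.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """For each token, pick the k highest-probability experts and
    renormalize their gate weights to sum to 1."""
    assignments, probs_per_token = [], []
    for logits in router_logits:
        probs = softmax(logits)
        top = sorted(range(len(probs)), key=lambda e: probs[e], reverse=True)[:k]
        norm = sum(probs[e] for e in top)
        assignments.append([(e, probs[e] / norm) for e in top])
        probs_per_token.append(probs)
    return assignments, probs_per_token

def load_balancing_loss(assignments, probs_per_token, n_experts):
    """Auxiliary loss n_experts * sum_i f_i * P_i; equals 1 under perfectly
    uniform routing and grows as tokens concentrate on few experts."""
    n_tokens = len(assignments)
    f = [0.0] * n_experts
    for top in assignments:
        f[top[0][0]] += 1.0 / n_tokens  # count each token toward its top-1 expert
    P = [sum(p[i] for p in probs_per_token) / n_tokens for i in range(n_experts)]
    return n_experts * sum(fi * Pi for fi, Pi in zip(f, P))

# Made-up router scores: 4 tokens, 2 experts.
balanced_logits = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.0], [0.0, 2.0]]
collapsed_logits = [[2.0, 0.0]] * 4

balanced_loss = load_balancing_loss(*route_top_k(balanced_logits, 1), n_experts=2)
collapsed_loss = load_balancing_loss(*route_top_k(collapsed_logits, 1), n_experts=2)
top2_assignments, _ = route_top_k(balanced_logits, 2)
```

The argmax in the forward pass is not differentiable, but the $P_i$ term is, which is why the loss still gives the router a gradient toward balanced assignments.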

symbolic reasoning

See also notes on 📌 comp neuro.

- Compositional processing emerges in neural networks solving math problems (russin, roland fernandez, …, smolensky, gao, 2021)

- Modular Deep Learning (pfeiffer, ruder, .., ponti, 2023) - overview of different modular architectures

- neurocompositional computing (smolensky…gao, 2022)

- longer tutorial (smolensky, …, gao, 2022)

- central paradox of cognition is that the brain uses continuous neural encodings but is also compositional (smolensky et al. 1992)

- Compositionality

- Continuity - the encoding and processing of information is formalized with real numbers that vary continuously

- 3 challenges: compositional generalization, data efficiency, comprehensibility

- solution - NECST: Neurally-Encoded Compositionally-Structured Tensor computing (smolensky & legendre, 2006) - basically leverages TPR

- TPR roles and fillers can both be made continuous

- neural space vs symbolic space (many different things (e.g. sentences) can mean the same thing) - word vectors can be thought of as “soft symbols”

- want to move from symbolic repr. to neural repr. while keeping interpretability

- system should output intermediate steps in addition to answer

- thinking fast (system 1: fast, intuitive) + slow (system 2: slower, logical, derivative)

- concrete proposal: transformer activation vector should encode graph of flow through the network

- ex. task: regurgitate a sequence

- NECSTransformer: Enhancing the Transformer with Explicit Relational Encoding for Math Problem Solving (schlag, smolensky, …, schmidhuber, gao, 2019)

- TP-attention

- beat SOTA on free-form math word-problems

- in addition to K, Q, V, also add a role-vector

- do element-wise multiplication of outputted vector with role-vector

- TPR built as outer product of 2 vectors:

- filler - the vector returned by attention

- ex. one head learns “second-argument-of”

- role - a relation conceptually labeling an edge of the attention graph

- TP-N2F: Tensor Product Representation for Natural To Formal Language Generation (chen…gao, 2019)

- Logical Transformers: Infusing Logical Structures into Pre-Trained Language Models (wang, huang, …, gao, 2023) - use logical model to alter embeddings before feeding to LLM

- Implicit Chain of Thought Reasoning via Knowledge Distillation (deng…smolensky…, 2023)
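The tensor product representation (TPR) mentioned above binds each filler to a role with an outer product and sums the results. A minimal sketch, assuming orthonormal role vectors (which make unbinding exact) and hypothetical helper names `bind` and `unbind`; the filler and role values are made up for illustration:

```python
def outer(u, v):
    """Outer product of two vectors as a nested list (len(u) x len(v))."""
    return [[ui * vj for vj in v] for ui in u]

def bind(fillers, roles):
    """TPR = sum_k filler_k (outer product) role_k."""
    T = [[0.0] * len(roles[0]) for _ in range(len(fillers[0]))]
    for filler, role in zip(fillers, roles):
        for i, row in enumerate(outer(filler, role)):
            for j, value in enumerate(row):
                T[i][j] += value
    return T

def unbind(T, role):
    """Recover the filler bound to `role` by multiplying the TPR with the
    role vector; exact only when the roles are orthonormal."""
    return [sum(t * r for t, r in zip(row, role)) for row in T]

# Two orthonormal roles (e.g. "first-argument-of", "second-argument-of").
roles = [[0.6, 0.8], [-0.8, 0.6]]
fillers = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]

T = bind(fillers, roles)
recovered_first = unbind(T, roles[0])
recovered_second = unbind(T, roles[1])
```

Because both fillers and roles are ordinary real-valued vectors, the structure stays continuous while remaining decomposable into its parts.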

embeddings

- detailed overview of info retrieval (bruch, 2024)

- Faiss: A library for efficient similarity search (Johnson et al 2019) - implements fast approximate nearest neighbor search

- introductory blog post on embeddings

- basic training pipeline

- standard self-supervised pre-training, e.g. BERT

- weak unsupervised pre-training, e.g. weakly related text pairs, such as QA pairs from forums like StackExchange and Quora

- high-quality contrastive finetuning on curated paired data, e.g. QA from web searches

- datasets

- MTEB leaderboard

- Instructor eval

- Billboard

- Prompt retrieval

- Long contexts

- Older

- Training

- Nomic 235M curated text pairs (mostly filtered from here)

- Followed by supervised contrastive fine-tuning on datasets like MSMarco, NQ, NLI, HotpotQA, Fever, WikiAnswers, etc.

- MEDI (from Instructor paper): combines 300 datasets from Super-NaturalInstructions with 30 datasets from existing collections designed for embedding training

- customization

- e.g. add prompt or prefixes like search query, search document, classification, clustering before embedding so model knows how to match things

- top-performing models

- Repetition Improves Language Model Embeddings (springer, kotha, fried, neubig, & raghunathan, 2024)

- Feed a prompt such as “Rewrite the sentence: x, rewritten sentence: x” to the language model and pool the contextualized embeddings of the 2nd occurrence of x

- include task-specific prefix like in E5-mistral-instruct

- E5-mistral-instruct: Improving Text Embeddings with LLMs (wang…wei, 2023) - finetune embeddings on synthetic data

- first prompt GPT-4 to brainstorm a list of potential retrieval tasks, and then generate (query, positive, hard negative) triplets for each task (GPT writes the whole documents)

- builds on E5 (wang…wei, 2022)
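The (query, positive, hard negative) triplets above are typically consumed by a contrastive objective. The notes do not name the loss, so the sketch below assumes an InfoNCE-style loss over cosine similarities; the temperature value and the names `cosine` and `info_nce` are hypothetical, and the vectors are made up.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(query, positive, negatives, temperature=0.05):
    """-log softmax probability of the positive among [positive] + negatives,
    using temperature-scaled cosine similarity as the logit."""
    sims = [cosine(query, positive)] + [cosine(query, n) for n in negatives]
    logits = [s / temperature for s in sims]
    m = max(logits)  # subtract the max for numerical stability
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]

query = [1.0, 0.0]
close_doc = [0.9, 0.1]   # embedding near the query
far_doc = [0.0, 1.0]     # embedding far from the query

# Low loss when the positive is the closer document, high when it is not.
good_loss = info_nce(query, close_doc, [far_doc])
bad_loss = info_nce(query, far_doc, [close_doc])
```

Hard negatives matter because an easy negative contributes almost nothing to the denominator, so the loss is already near zero and provides little training signal.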